VidiStream [C ARC]

Introduction

The VidiStream application and its core functionalities are described in detail in Multimedia Content Delivery with VidiStream [C ARC]. Instances of VidiStream serve one or more of the following purposes, referred to as modes from here on:

stream:

serve multimedia files unprocessed as DASH segments via the /dash endpoint, without decoding and re-encoding them

render:

serve DASH segments via the /dash endpoint that represent the rendered result of (potentially complex) timeline definitions, by decoding the source(s) and mixing them on the fly

trickplay:

provide the proprietary, non-DASH-compliant trickplay API at the /trickplay endpoint for consumption by clients

The following table gives an overview of the various aspects of the three modes of operation before their differences are discussed in detail.

| Mode | stream | render | trickplay |
|---|---|---|---|
| Purpose | On-the-fly DASH packaging of individual files without processing them | On-the-fly DASH packaging of timeline segments, involving decoding and encoding of potentially multiple sources | Serve individual video and/or audio frames as fast as possible |
| Used when? | Streaming without requiring the following additional features: | Streaming with the need to enable one or more of these additional features: | Need for very fast random access to individual video and/or audio frames, but not for linear playback |
| Used where? | | | |
| Resources | | | |
| Users per instance | ~150-200 | ~30-50 | ~150-200 |
| Load balancing strategy | Bounded-load Consistent Hashing | Consistent Hashing | Consistent Hashing |
| External endpoints | | | |
| Internal URL structure | | | |
| Timeline is in | | | |

Kubernetes Architecture

The following sections give an overview of the VidiStream architecture when hosted in Kubernetes.

Separation of Modes in Kubernetes

As shown above, the three modes of VidiStream differ in several aspects, which justifies treating them separately. The most important distinctions are their resource requirements and their load balancing requirements.

Resource Requirements

Since the render mode has much higher resource requirements than the other two modes, it shall be independently scalable on dedicated hardware that matches these requirements in a production setup.
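A rough sizing sketch makes the effect of these requirements tangible. It assumes, hypothetically, that the lower bounds of the "Users per instance" figures from the overview table hold and that users are spread evenly across instances. `replicas_needed` is an illustrative helper and is not part of VidiStream or its Helm chart.

```python
import math

# Lower bounds of the "Users per instance" row, used as conservative
# capacities (assumption: users are spread evenly over instances).
USERS_PER_INSTANCE = {"stream": 150, "render": 30, "trickplay": 150}


def replicas_needed(mode: str, concurrent_users: int) -> int:
    """Return the number of instances a mode needs (always at least one)."""
    if concurrent_users < 0:
        raise ValueError("concurrent_users must not be negative")
    return max(1, math.ceil(concurrent_users / USERS_PER_INSTANCE[mode]))


if __name__ == "__main__":
    for mode in USERS_PER_INSTANCE:
        print(mode, replicas_needed(mode, 600))
```

For 600 concurrent users per mode, this yields 4 stream, 20 render and 4 trickplay instances. Render mode therefore needs about five times as many instances for the same number of users, which is why it benefits from scaling separately.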

Load Balancing Requirements

At the highest level, any number of VidiStream instances may exist, all with access to the same set of storages. Each client streaming request is directed to one VidiStream instance, which retrieves the requested file(s) and delivers the content.

Scenarios

Unlike many other application scenarios, streaming involves additional considerations because it requires physical file access. Most importantly, each access to a file comes with the overhead of opening the source file and retrieving its metadata, such as headers and index positions. Once a file is open, an instance can read bytes from it very efficiently, so unnecessary file openings are to be avoided.
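The open-once, read-many pattern can be sketched as a small per-instance cache of opened files. This is a hypothetical illustration. The `opener` callable stands in for the expensive open and metadata parsing step, and neither the class name nor the LRU limit is taken from VidiStream.

```python
from collections import OrderedDict
from typing import Callable


class OpenFileCache:
    """Hypothetical per-instance cache of opened source files (LRU).

    `opener` stands in for the expensive step of opening a file and reading
    its headers and index; it only runs on a cache miss.
    """

    def __init__(self, opener: Callable[[str], object], max_open: int = 64):
        self._opener = opener
        self._max_open = max_open
        self._handles: "OrderedDict[str, object]" = OrderedDict()
        self.opens = 0  # how often the expensive open step was performed

    def get(self, path: str) -> object:
        if path in self._handles:
            self._handles.move_to_end(path)  # mark as most recently used
            return self._handles[path]
        handle = self._opener(path)
        self.opens += 1
        self._handles[path] = handle
        if len(self._handles) > self._max_open:
            _, evicted = self._handles.popitem(last=False)  # least recently used
            close = getattr(evicted, "close", None)
            if callable(close):
                close()
        return handle
```

Suppose requests for one file are spread over three instances. Each instance pays the open cost once, so the file is opened three times instead of once. The routing strategies discussed below aim to avoid exactly this.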

The following typical scenarios can be distinguished:

1. Individual clients, each requesting mostly distinct files for linear streaming from start to end:

typical for browsing use cases

for improved performance, access to each individual file shall be handled by one dedicated instance at a time, relieving other instances of the unnecessary file access overhead

technically, each server can access any position in any file, so this can be seen as a generally stateless scenario; however, a fixed association of server instances to opened files is strongly favored to reduce latency and overhead

2. Multiple clients requesting the same file for linear streaming from start to end:

in general, it is also desirable and efficient to have one instance serve multiple clients for the same file, due to the reduced file access overhead

however, if the number of simultaneous clients reaches a threshold, the quality of service can degrade because individual VidiStream instances become overloaded

at this threshold, it becomes favorable to route requests away from the main instance

ideally, excess requests should be directed to a fixed secondary instance, which then also opens the file for reading

client streaming quality should be impacted only slightly in this overload case

3. Individual clients working with timelines and requesting to render them, where the source material in the timelines is mostly used exclusively by one client at any given time:

to work efficiently in terms of caching and latency, it is best to form a 1:1 connection from client to VidiStream instance

a session needs to be created to achieve this

4. Individual clients working with timelines and requesting to render them, where the source material in the timelines is mostly accessed simultaneously by several clients at any given time:

this is the most complex use case; it is currently not optimized and is treated the same as scenario 3

5. Clients that want to scrub or browse through a single file each in the most efficient manner:

DASH streaming can only deliver groups of frames, not single frames, which makes it inefficient for this use case

to maximize caching effects and reduce access time, the client needs to be directed to the VidiStream instance that is already accessing the file via the trickplay API

Client-side load balancing

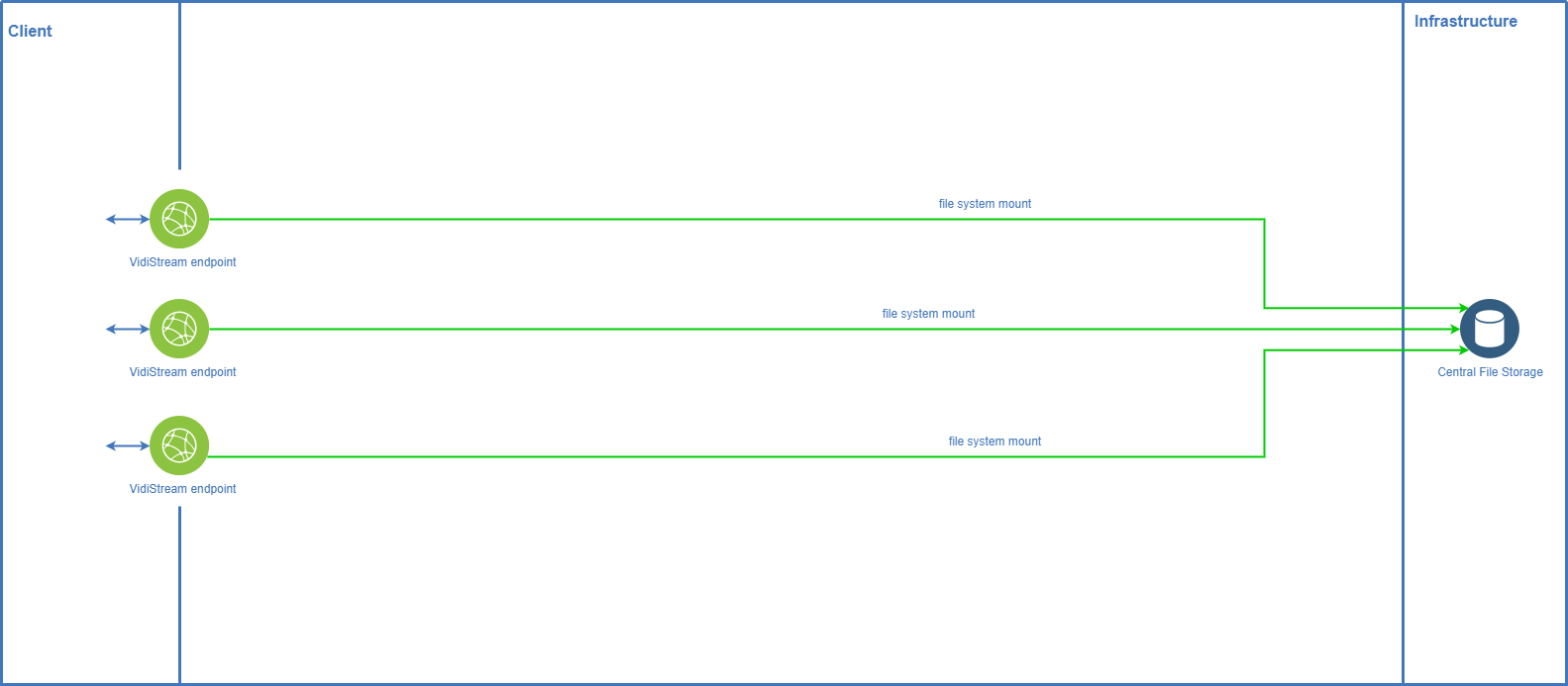

In the worker-based VPMS system there exists a client-side load balancing where the client applications are aware of all existing streaming endpoints and select them in a round-robin manner. Once a session with a single endpoint is established it is held until the connectivity to the endpoint is lost and then the next endpoint is contacted:

This model fails to meet several demands that arise from the use cases above. While it does allow sessions with VidiStream instances, it does not select streaming endpoints efficiently, since the client never has any knowledge of the server-side instance load.
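The client-side model can be sketched as follows to make this limitation concrete. This is a hypothetical illustration, not VPMS client code. `connect` is an assumed callable that reports whether a session could be established with an endpoint.

```python
from typing import Callable, List, Optional


class RoundRobinClient:
    """Sketch of client-side load balancing: endpoints are tried in
    round-robin order and a session is kept until the endpoint becomes
    unreachable. Nothing here knows about server load or open files."""

    def __init__(self, endpoints: List[str], connect: Callable[[str], bool]):
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._endpoints = endpoints
        self._connect = connect  # True if a session could be established
        self._next = 0
        self.current: Optional[str] = None

    def session_endpoint(self) -> str:
        """Return the current session's endpoint, establishing one if needed."""
        if self.current is not None:
            return self.current
        for _ in range(len(self._endpoints)):
            candidate = self._endpoints[self._next]
            self._next = (self._next + 1) % len(self._endpoints)
            if self._connect(candidate):
                self.current = candidate
                return candidate
        raise ConnectionError("no streaming endpoint reachable")

    def connection_lost(self) -> None:
        """Drop the session; the next call moves on to the next endpoint."""
        self.current = None
```

The selection depends only on the client's own position in the rotation. It never depends on which file is requested or on the current server load, so clients requesting the same file may end up on different instances.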

Server-side load balancing

To better meet the demands of the use cases highlighted above, the Kubernetes-hosted VidiStream architecture uses server-side load balancing. Instead of the clients selecting among the available endpoints, this model provides one central endpoint. Clients contact this endpoint for streaming requests, and it applies the best-suited load balancing strategy for each request.

This endpoint is realized as a Kubernetes Ingress. The ingress controller serving this endpoint is responsible for distributing the stream requests appropriately to the internal VidiStream instances running in the Kubernetes cluster.

Load Balancing Strategies

Considering scenarios 1 to 5, it becomes apparent that some use cases strongly favor a session between client and VidiStream instance. Establishing a fixed 1:1 connection between clients and VidiStream instances is optimal when:

rendering a timeline in render mode, due to caching effects (scenarios 3 and 4) and the relatively large overhead of multi-source processing compared to simple DASH segmentation without processing

entering trickplay mode, to achieve the maximum possible access speed to individual frames of a single file (scenario 5) by eliminating the file opening overhead on multiple VidiStream instances

In stream mode, however, a 1:1 relation between VidiStream instance and file access is favorable only up to a certain number of parallel accesses to the file (scenarios 1 and 2). Beyond that point, preventing instances from being overloaded is more desirable than keeping the same connection at all costs. Opening the file on an additional instance does reduce performance, but in this case the cost is justifiable.

Note that all clients, in all modes, must be able to create a new session with a backend VidiStream instance if an existing one fails abruptly. Client applications must not stop working permanently (requiring a re-login or restart) when a server becomes unavailable. A longer reconnection time that degrades the user experience may be acceptable if a VidiStream instance becomes (temporarily) unavailable.
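A minimal sketch of such a reconnection mechanism follows. It assumes a hypothetical `create_session` callable that obtains a fresh session (for example a new render session URL), and a `request` callable that raises `ConnectionError` when the backing instance is unavailable. Both names are illustrative. The exponential backoff accepts a longer reconnection time in exchange for never failing permanently, and the injectable `sleep` only exists to make the sketch testable.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def with_reconnect(
    request: Callable[[str], T],
    create_session: Callable[[], str],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `request` on a fresh session, re-creating the session on failure.

    Hypothetical helper: `create_session` returns a session identifier and
    `request` raises ConnectionError if the VidiStream instance is gone.
    """
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        if attempt > 0:
            # Exponential backoff: accept a longer reconnection time
            # instead of failing permanently.
            sleep(base_delay * (2 ** (attempt - 1)))
        try:
            session = create_session()  # new session, possibly on another instance
            return request(session)
        except ConnectionError as err:
            last_error = err
    raise ConnectionError(f"giving up after {max_attempts} attempts") from last_error
```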

Session-based strategy and Consistent Hashing

HTTP-based sessions could technically bind a client to a VidiStream instance, but they would ignore the requirement that accesses to an individual file be bundled on dedicated VidiStream instances. Two clients requesting the same file are likely to get HTTP sessions with different VidiStream instances if the load balancer routes requests round-robin, randomly, by connection count or in any other generic fashion. Each client then sticks to its HTTP session, and hence to its VidiStream instance, to access the file, but the file opening overhead is unnecessarily doubled.

The only way to establish a 1:1 relation between VidiStream instances and accessed files is to base the load balancing on the client's request URI for the file to stream in stream mode. Fortunately, the same approach can also be used to establish 1:1 sessions between clients and VidiStream instances in the session-based scenarios. Obviously, the routing of requests to VidiStream instances cannot be based on all URI segments present. It can only use the given depth of leading segments that form the stable identifier:

| Mode | stream | render | trickplay |
|---|---|---|---|
| URI style | | | |
| Depth | 3 | 3 | 3 |
| Explanation | The URI is stable for requests to an individual file because the URI segments contain the Base64-encoded path to the file | When a client initiates a render session, a URL containing a random and unique session identifier is initially created and used for the remainder of the client's rendering session. Timeline data is posted in the body of the request. | The trickplay API was built to also include a session UID in the path, making it a URL with several levels of constant segments addressing the unique session |
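The idea of hashing only the stable leading segments can be sketched as a key function. The URI styles of the modes are not reproduced here, so the example paths in the usage below are hypothetical. The sketch keeps the first `depth` path segments and drops deeper segments and the query string. HAProxy offers a comparable `depth` parameter for `balance uri`, but HAProxy counts slashes, so its exact cut-off may differ from this sketch.

```python
from urllib.parse import urlsplit


def hash_key(uri: str, depth: int = 3) -> str:
    """Return the part of the request path used as the load balancing key.

    Keeps the first `depth` path segments and drops the query string and any
    deeper segments (e.g. individual DASH segment names), so that all
    requests for one file or session share the same key.
    """
    path = urlsplit(uri).path
    segments = [s for s in path.split("/") if s]
    return "/" + "/".join(segments[:depth])
```

With a hypothetical request such as `/dash/abc/def/segment_1.m4s`, the key is `/dash/abc/def`, the same key as for the manifest of that file.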

To guarantee a strict session binding between client and VidiStream instance, the Consistent Hashing algorithm can be applied. Put simply, the source value (here the URI segments of the given depth that form the stable endpoint) is passed through a hash function. The hash consistently associates the source value with one of n target values (here the available VidiStream instances). If n changes, the algorithm keeps the number of re-targeted source values as small as possible.
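A minimal consistent hash ring illustrates the property described above. This is a generic textbook variant with virtual nodes and SHA-1-derived ring positions, chosen for illustration. It is not HAProxy's internal implementation, and the instance names in the usage are hypothetical.

```python
import bisect
import hashlib
from typing import Iterable, List, Tuple


def _hash(value: str) -> int:
    # Stable 64-bit ring position; Python's hash() is randomized per process.
    return int.from_bytes(hashlib.sha1(value.encode("utf-8")).digest()[:8], "big")


class ConsistentHashRing:
    """Minimal consistent hash ring with virtual nodes (illustrative only)."""

    def __init__(self, instances: Iterable[str], vnodes: int = 100):
        self._vnodes = vnodes
        self._ring: List[Tuple[int, str]] = []
        for instance in instances:
            self.add(instance)

    def add(self, instance: str) -> None:
        # Each instance occupies several points to even out the distribution.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{instance}#{i}"), instance))

    def remove(self, instance: str) -> None:
        self._ring = [point for point in self._ring if point[1] != instance]

    def lookup(self, key: str) -> str:
        """Return the instance owning the first ring point at or after the key."""
        if not self._ring:
            raise LookupError("no instances available")
        index = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[index % len(self._ring)][1]
```

Removing an instance only re-targets the keys that instance owned, and all other keys keep their instance. This is what keeps render and trickplay sessions stable when the number of instances changes.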

As of now, only ingress controllers based on HAProxy support URI-based load balancing with the Consistent Hashing algorithm. Therefore, the load balancing solution is built around the HAProxy Ingress Controller, which proved to be the best-supported and most stable HAProxy-based ingress controller implementation.

The session-based render and trickplay modes are best covered by the Consistent Hashing load balancing method, because their sessions need to be stable at all times. Any session loss forces the client to re-establish the complete session and, at the very least, causes longer waiting times. Clients need to handle this session rebuilding case, but it is generally to be avoided for the sake of performance.

Please note that this algorithm does not consider the server-side load, since the target instance is determined by the hash function alone. It is assumed that sessions are distributed equally among VidiStream instances and have equal resource consumption. In unfavorable scenarios, however, a VidiStream instance might be overloaded, hence the need for a proper reconnection mechanism.

Sessionless strategy with Bounded-load Consistent Hashing

The stream mode has no such hard requirement for session affinity. An optimal solution for this mode would handle the case where VidiStream instances are overloaded by too many clients requesting the same file(s). To do so, it would take the actual load of each server into account. Stable sessions for clients should be aimed for, but not at the cost of overloading instances.

As one of the major video streaming portals, Vimeo faced the same problem of optimizing access to video files. Links to videos on Vimeo are also stable (in essence, a URI with the video ID at the end), so the same problem applies at an architectural level. Vimeo's goal is to make full use of caching servers that already have segments of a file cached when the same video is requested simultaneously.

Vimeo engineering proposed a solution based on Bounded-load Consistent Hashing and implemented it in HAProxy. The details can be found here: https://medium.com/vimeo-engineering-blog/improving-load-balancing-with-a-new-consistent-hashing-algorithm-9f1bd75709ed

In summary, the Bounded-load Consistent Hashing algorithm follows the same strategy as the Consistent Hashing algorithm, but additionally tracks how the total load is distributed across the active instances at any time. When an instance exceeds a configurable relative load (the “range of acceptable allowed overloading”), the algorithm routes traffic to another instance for as long as the overload persists. The special property of the algorithm is that the instance targeted next in case of overload is again stable. Excess traffic is not scattered around but spills over to the next instance in a fixed order. Typically, at most two instances then serve the same file, which makes it an efficient solution to the problem.
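The bounded-load variant can be sketched on top of the same kind of ring. It follows the general idea from the Vimeo article: a per-instance capacity is derived from the average load times a balance factor, and overflow walks the ring clockwise from the key's position. This is a simplified, hypothetical illustration rather than HAProxy's implementation. In HAProxy, the factor is configured as a percentage with the `hash-balance-factor` backend keyword.

```python
import bisect
import hashlib
import math
from collections import Counter
from typing import Iterable, List, Tuple


def _hash(value: str) -> int:
    # Stable 64-bit ring position (Python's hash() is randomized per process).
    return int.from_bytes(hashlib.sha1(value.encode("utf-8")).digest()[:8], "big")


class BoundedLoadRing:
    """Consistent hashing with bounded loads (simplified, hypothetical sketch).

    balance_factor=1.25 lets an instance carry up to 125 % of the average
    number of active requests (rounded up) before traffic overflows.
    """

    def __init__(self, instances: Iterable[str], balance_factor: float = 1.25, vnodes: int = 100):
        names = set(instances)
        if not names:
            raise ValueError("at least one instance is required")
        if balance_factor < 1.0:
            raise ValueError("balance_factor must be >= 1.0")
        self._factor = balance_factor
        self._count = len(names)
        self._ring: List[Tuple[int, str]] = sorted(
            (_hash(f"{name}#{i}"), name) for name in names for i in range(vnodes)
        )
        self.load: Counter = Counter()  # active requests per instance

    def _capacity(self) -> int:
        # Per-instance limit, counting the request that is being placed.
        total = sum(self.load.values()) + 1
        return math.ceil(self._factor * total / self._count)

    def acquire(self, key: str) -> str:
        """Place one request for `key` and return the chosen instance."""
        capacity = self._capacity()
        start = bisect.bisect(self._ring, (_hash(key), ""))
        # Walk clockwise from the key's position. The order is fixed per key,
        # so overflow always goes to the same next instance.
        for step in range(len(self._ring)):
            instance = self._ring[(start + step) % len(self._ring)][1]
            if self.load[instance] < capacity:
                self.load[instance] += 1
                return instance
        # Not reached for balance_factor >= 1: some instance is below capacity.
        raise RuntimeError("no instance below capacity")

    def release(self, instance: str) -> None:
        """Mark one request on `instance` as finished."""
        if self.load[instance] > 0:
            self.load[instance] -= 1
```

Without load, the result equals plain consistent hashing. For a single very popular file, overflow first goes to the next instance in the key's fixed ring order. It continues to further instances only if the load keeps growing, and always in the same order.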

Consequently, the Bounded-load Consistent Hashing algorithm is used when serving in stream mode.

Separation of concerns

Because of the different demands of each mode highlighted above, production scenarios in which many users need to be served require individually scalable Kubernetes deployments, one for each VidiStream mode. This is the default setup that comes with the product's Helm chart.

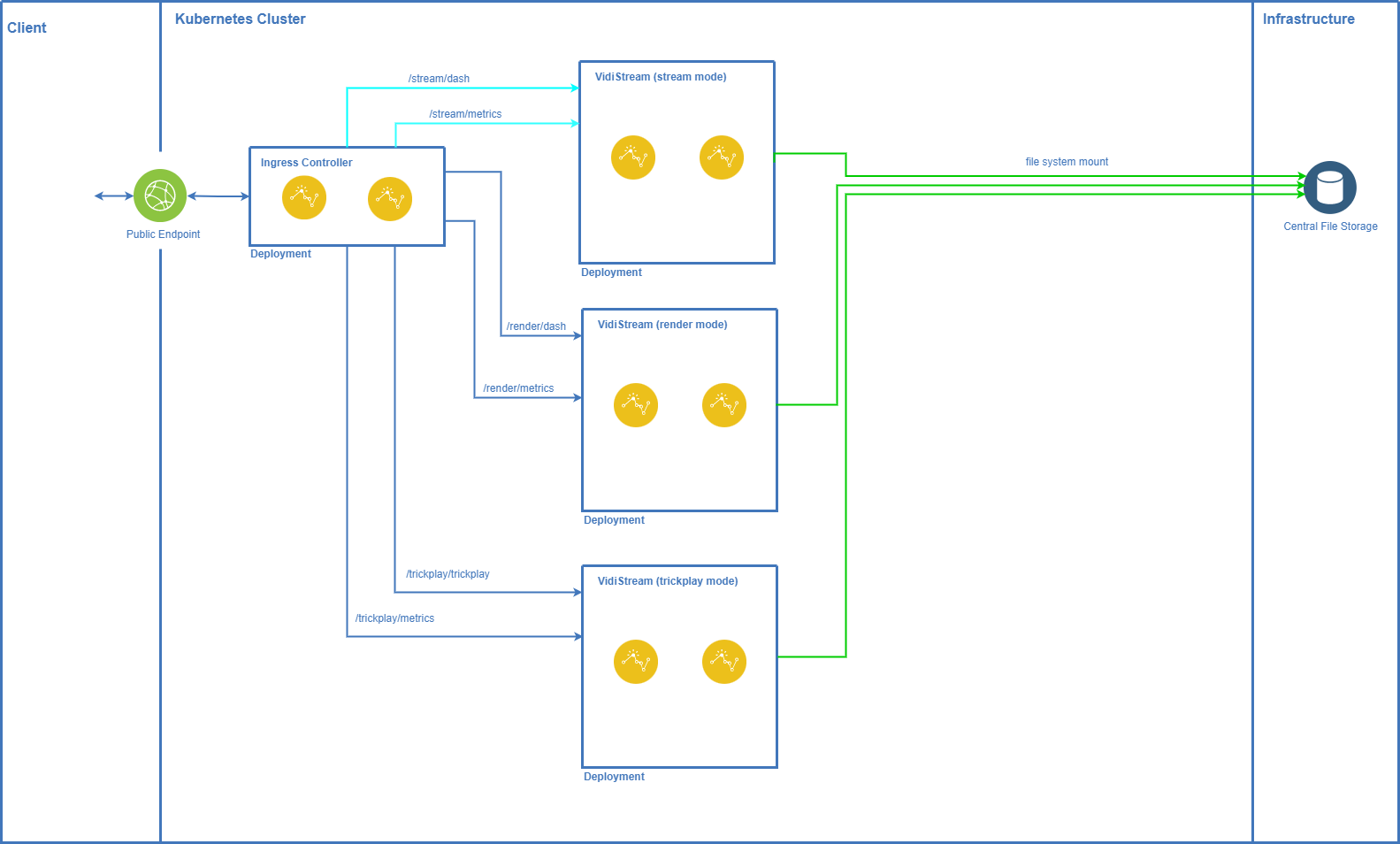

The following diagram visualizes this productive setup of three separately scalable VidiStream deployments based on their mode:

For test systems, however, a single deployment can provide all three modes of operation. This is possible because all endpoints are distinct from each other, so a single deployment can alternatively be configured to serve all modes.

VidiStream and Clients Hosting

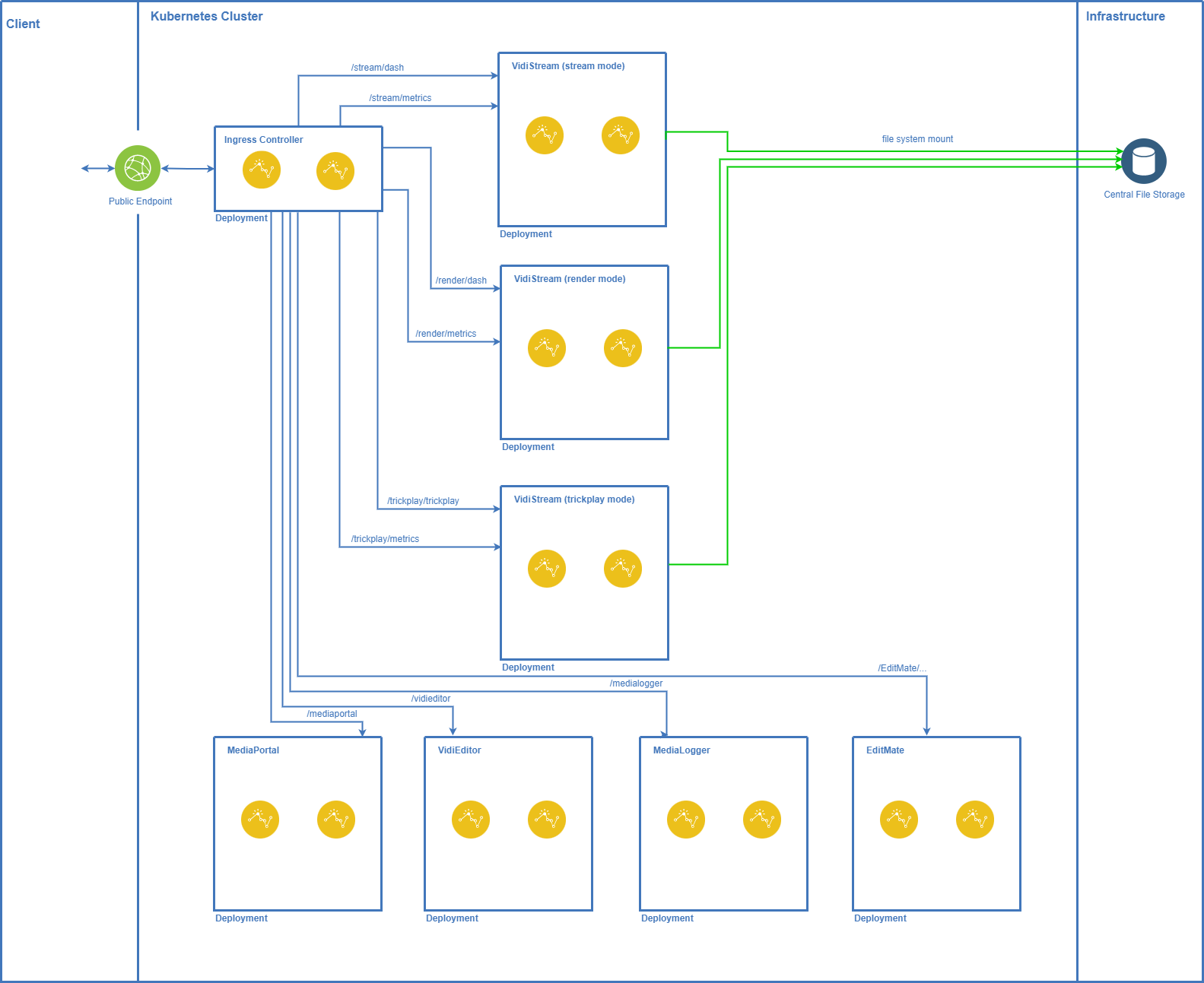

When running VidiStream in Kubernetes, the clients that depend on VidiStream must also be considered as part of the overall architecture.

The overall client/server hosting concept for Vidispine applications that communicate with VidiStream is to host all clients that depend on VidiStream, as well as the VidiStream endpoints themselves, on the same ingress endpoint/DNS address. Requests are routed to the client and VidiStream endpoints via subpaths on the same ingress host.
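Subpath routing on a shared host can be sketched as segment-wise prefix matching. The prefixes and backend service names below are hypothetical placeholders, since the actual routes are product specific. The matching mirrors the element-wise behavior of Kubernetes Ingress `pathType: Prefix`, where the longest matching path takes precedence.

```python
from typing import Dict, List, Optional

# Hypothetical subpath -> backend service mapping on one shared ingress host.
ROUTES: Dict[str, str] = {
    "/mediaportal": "mediaportal-svc",
    "/medialogger": "medialogger-svc",
    "/editmate": "editmate-svc",
    "/vidieditor": "vidieditor-svc",
    "/vidistream": "vidistream-svc",
}


def _segments(path: str) -> List[str]:
    return [s for s in path.split("/") if s]


def route(path: str) -> Optional[str]:
    """Return the backend for `path`, matching whole path segments.

    "/editmate/app.js" matches "/editmate", but "/editmatex" does not;
    if several prefixes match, the longest one wins.
    """
    requested = _segments(path)
    best: Optional[str] = None
    best_len = -1
    for prefix, backend in ROUTES.items():
        parts = _segments(prefix)
        if requested[: len(parts)] == parts and len(parts) > best_len:
            best, best_len = backend, len(parts)
    return best
```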

Notable advantages of this approach are:

no CORS problems, since all communication happens via the same reverse proxy host

cheaper setup with only one load balancer and certificate (also in the cloud)

only one ingress controller in use (less complexity and reduced maintenance)

less complexity in product setups (only an ingress needs to be provided per client, no controller)

multiple ingresses can be combined on one HAProxy ingress controller (same as with NGINX)

uniform structure for accessing client applications at <host>/<client_app> that can be communicated to customers and is consistent across all enterprise installations

The following table summarizes all routes that shall be available at the same DNS address/hostname (depending on whether the client is part of the system or not):

| Application | Routes |
|---|---|
| MediaPortal (21.1 or newer) | |
| MediaLogger | |
| EditMate | |
| VidiEditor | |
| VidiStream | |