Bringing Browser-Based Video Editing to the Next Level - 29/12/2023

By Matthias Peine

Video Editing in the browser? Why? Or better, why not?

For decades, video editing has been the kind of job that requires heavy lifting: powerful client PCs with dedicated non-linear editing (NLE) software installed, plus files stored on the local machine or directly accessible from it. But in 2023 we found that this is no longer necessarily the case and thought a review was overdue. We at Vidispine believed that many editing tasks should be possible on a web page, without client-side installations or particularly powerful hardware. That’s why we built VidiEditor, our browser-based editing solution tailored for journalists and content producers (learn more about VidiEditor here: VidiEditor Documents (vidinet.net)).

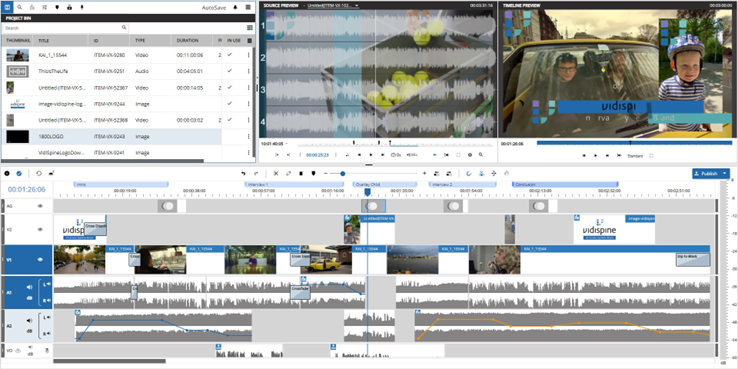

VidiEditor: Vidispine's browser-based video editing application

We call it a MAM!

One may be inclined to compare Vidispine’s VidiEditor to traditional NLEs such as Adobe Premiere, Final Cut or Avid. Those players focus on editing in the most advanced stages of production. VidiEditor instead concentrates on simpler, more accessible editing tasks that anyone can perform, while taking advantage of being part of the Vidispine media asset management (MAM) system and its deep integration into the customer's system environment.

Vidispine’s approach to MAM systems also tackles the challenge of serving a large number of users with media that is stored centrally in the server infrastructure, and of making media easy to share among users. Usually, this is done either by using streaming technologies or by downloading the full media to the client as a working copy. Both have their own advantages and drawbacks, as we will see later.

Yes, this technology thing matters!

When we started developing VidiEditor back in 2018, there were basically two viable approaches to browser-based video editing. One is downloading the media to the client. This comes with disadvantages: longer waiting times for longer media, limitations when dealing with growing files, disk space needed on the client machine and, not least, limited accuracy of playback when presenting the media on the web page. In addition, the video effects must somehow be calculated on the client machine.

Using streaming technologies, like those behind Netflix or Amazon Prime, seemed to be the better fit in the context of MAM systems. It does, however, raise the question of how video effects, transitions or audio adjustments are calculated and how much hardware is needed to do those tasks server side. Additionally, a design of this kind results in a flow where each user interaction requests a newly composed media stream from the server, which causes a lot of recomputing of effects and a lot of network traffic to transfer the composed video stream to the application.

As a result, both approaches are possible, but neither seems optimal for editing, where the user modifies media frequently and where a large number of users are served with the same media.

The wheel goes round and round….

The game changed because modern web browsers get more powerful year by year. Since the end of 2021, web browser APIs have allowed direct access to individual media components. In our case this means, in particular, accessing each video frame individually and modifying it as needed.

With this, an idea was born: use DASH streaming to serve the users centrally, but let the client PC do the computing work.

The implied benefits are:

Drastically lowering the needed bandwidth and, with that, the carbon footprint.

Providing a user experience with nearly no latency when working in the web GUI.

Lowering the total cost of ownership for customers while reducing the needed server hardware.

Using a better proxy quality, depending on the client hardware.

Enabling local files to be mixed with centrally stored files.

Calculating the timeline effects and media mixing inside the web browser.

Building a solution that serves a large number of users via streaming, while avoiding long waiting times and downloads of complete media, requires additional web technologies.

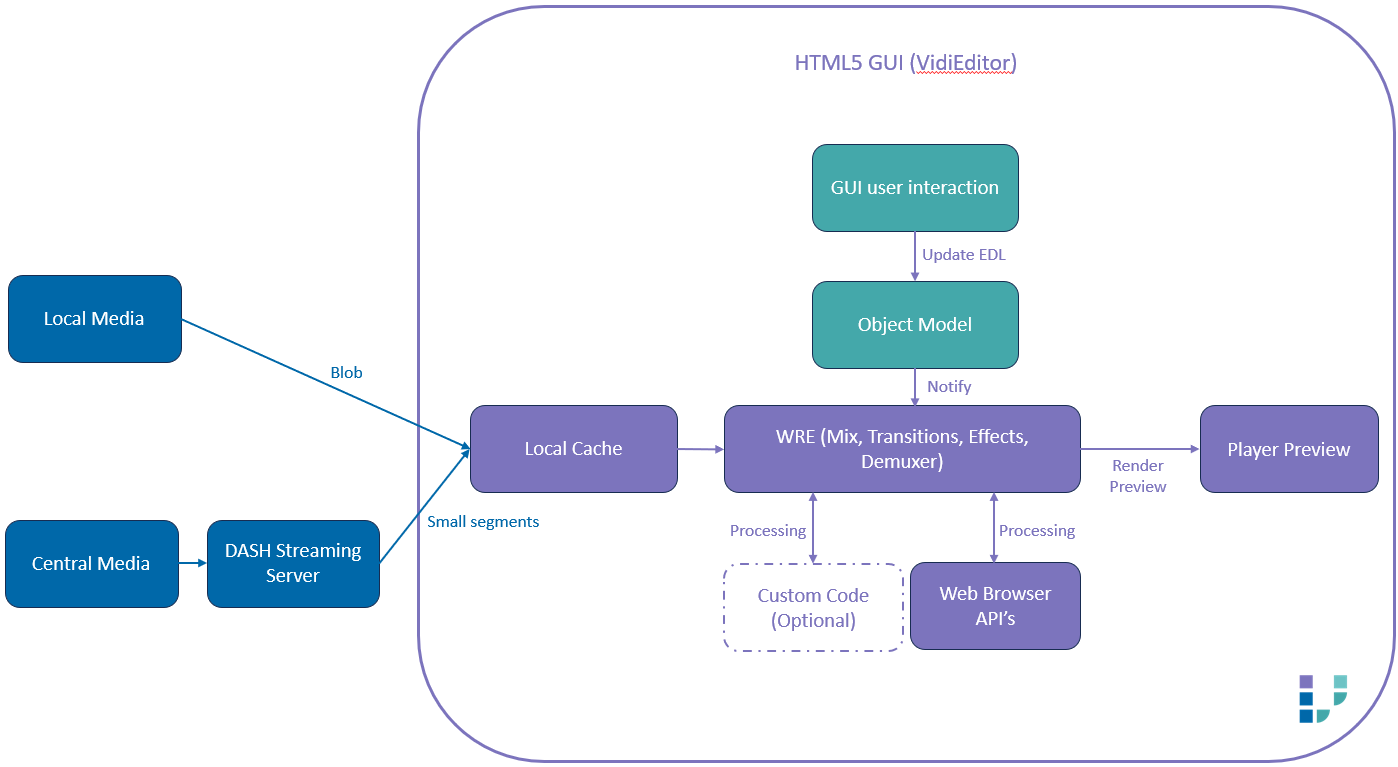

Figure 2 Web Render Engine High Level Architecture

The Web Render Engine (WRE) comes as an NPM package, making it easy to integrate into an HTML5 frontend. It can either load local files as blobs or be fed with MPEG-DASH streams, which is the more typical case in the field of MAM systems. Small DASH segments of the media are requested from the streaming server to avoid long waiting times for the user. Once a DASH segment has been downloaded, it is cached locally using the browser's cache storage. As a result, media that has been used once is reused without being transferred over the network again, which means no waiting time and reduced network traffic.
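To make the segment handling concrete, here is a minimal sketch of how a client could work out which segments cover a stretch of the timeline and which of them still need to be fetched. It is not the WRE's actual code: the function names, the fixed segment duration and the URL pattern are all assumptions made for illustration.

```typescript
// Sketch only: fixed-length segments and all names here are assumptions.

/** Indices of the segments that cover the time range [startSec, endSec). */
function segmentsForRange(
  startSec: number,
  endSec: number,
  segmentDurationSec: number,
): number[] {
  if (segmentDurationSec <= 0 || endSec <= startSec) return [];
  const first = Math.floor(startSec / segmentDurationSec);
  const last = Math.ceil(endSec / segmentDurationSec) - 1;
  const indices: number[] = [];
  for (let i = first; i <= last; i++) indices.push(i);
  return indices;
}

/** URLs of the segments in the range that are not in the local cache yet. */
function segmentsToFetch(
  startSec: number,
  endSec: number,
  segmentDurationSec: number,
  urlFor: (index: number) => string, // hypothetical URL builder
  isCached: (url: string) => boolean, // hypothetical cache lookup
): string[] {
  return segmentsForRange(startSec, endSec, segmentDurationSec)
    .map((index) => urlFor(index))
    .filter((url) => !isCached(url));
}
```

In a browser, the lookup behind `isCached` could be backed by the Cache API (`caches.open()` and `cache.match()`). Those calls are asynchronous, so the synchronous predicate used here is a simplification to keep the sketch short.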

It should be mentioned that the user does not need to manage the client-side media, as the solution manages how much data is cached on the client and for how long. If thresholds are reached, the cached media is removed, and it is requested from the server again when the user next accesses it.
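One way such thresholds could work is sketched below. The article does not say which policy the WRE uses, so the combination of a maximum age and a byte budget with least-recently-used eviction is purely an assumption, as are all names.

```typescript
// Sketch only: the eviction policy (max age + LRU byte budget) is an assumption.

interface CachedSegment {
  key: string; // e.g. the segment URL
  bytes: number; // size of the cached segment
  lastUsedMs: number; // timestamp of the last access
}

/** Returns the keys of the segments that should be removed from the cache. */
function selectEvictions(
  entries: CachedSegment[],
  nowMs: number,
  maxBytes: number,
  maxAgeMs: number,
): string[] {
  const evicted: string[] = [];

  // Step 1: drop everything that has not been used for longer than maxAgeMs.
  const fresh = entries.filter((entry) => {
    const expired = nowMs - entry.lastUsedMs > maxAgeMs;
    if (expired) evicted.push(entry.key);
    return !expired;
  });

  // Step 2: drop least recently used segments until the byte budget is met.
  fresh.sort((a, b) => a.lastUsedMs - b.lastUsedMs);
  let totalBytes = fresh.reduce((sum, entry) => sum + entry.bytes, 0);
  for (const entry of fresh) {
    if (totalBytes <= maxBytes) break;
    evicted.push(entry.key);
    totalBytes -= entry.bytes;
  }
  return evicted;
}
```

A segment evicted this way is simply fetched again from the streaming server the next time the timeline needs it.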

As soon as the media is available on the client, all video and audio processing can be done on the client side using the client PC’s hardware. The backend only needs to deliver the media as DASH segments. This makes the backend load independent of user interaction, so it becomes predictable how many resources are needed server side.
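As an illustration of what client-side processing of a single frame can look like, the following sketch applies a simple mosaic effect to a raw RGBA pixel buffer. It is not taken from the WRE. It assumes the frame's pixels are already available as RGBA bytes (in a browser, for example, by drawing the frame to a canvas and calling `getImageData()`), and a real engine would more likely run such effects on the GPU.

```typescript
// Sketch only: a CPU mosaic on an RGBA buffer (4 bytes per pixel), modified in place.

function applyMosaic(
  pixels: Uint8ClampedArray,
  width: number,
  height: number,
  blockSize: number,
): void {
  const block = Math.max(1, Math.floor(blockSize));
  for (let by = 0; by < height; by += block) {
    for (let bx = 0; bx < width; bx += block) {
      const yEnd = Math.min(by + block, height);
      const xEnd = Math.min(bx + block, width);

      // Average each channel over the block.
      const sum = [0, 0, 0, 0];
      let count = 0;
      for (let y = by; y < yEnd; y++) {
        for (let x = bx; x < xEnd; x++) {
          const offset = (y * width + x) * 4;
          for (let c = 0; c < 4; c++) sum[c] += pixels[offset + c];
          count++;
        }
      }

      // Write the average back to every pixel of the block.
      for (let y = by; y < yEnd; y++) {
        for (let x = bx; x < xEnd; x++) {
          const offset = (y * width + x) * 4;
          for (let c = 0; c < 4; c++) pixels[offset + c] = Math.round(sum[c] / count);
        }
      }
    }
  }
}
```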

For a fast user experience without noticeable latency, the challenge is then to deal with the interactions (trim, split, effect changes, audio levelling etc.) that the user makes in the timeline GUI. These are expected to be reflected in the timeline preview on the fly. Therefore, an object model was implemented for this situation: the frontend application updates the edit decision list (EDL) generated by the user, which in turn notifies the WRE to create the preview directly.
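The notification flow described above can be sketched as a small observable model. The class and method names below are hypothetical and do not reflect the WRE's real API. The sketch only shows the pattern: every change to the EDL immediately informs whoever renders the preview.

```typescript
// Sketch only: a hypothetical observable EDL model, not the WRE's actual API.

interface Clip {
  id: string;
  track: number;
  startSec: number;
  endSec: number;
}

type EdlListener = (clips: readonly Clip[]) => void;

class EdlModel {
  private clips: Clip[] = [];
  private listeners: EdlListener[] = [];

  /** Registers a listener (e.g. the render engine); returns an unsubscribe function. */
  subscribe(listener: EdlListener): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }

  addClip(clip: Clip): void {
    this.clips = [...this.clips, clip];
    this.notify();
  }

  trimClip(id: string, startSec: number, endSec: number): void {
    this.clips = this.clips.map((c) => (c.id === id ? { ...c, startSec, endSec } : c));
    this.notify();
  }

  /** Pushes the current EDL state to all listeners after every change. */
  private notify(): void {
    this.listeners.forEach((listener) => listener(this.clips));
  }
}
```

A frontend would call `addClip` or `trimClip` in response to timeline interactions, while the preview subscribes once and re-renders whenever it is notified.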

Wait. I no longer need a powerful PC?

Obviously, the solution is not independent of the client machine used. It was always our goal to let users do video editing on a standard office PC. Which client PC is needed basically depends on three factors:

What video specifications are used (Resolution, Frame rate, Bitrate etc.)?

How much media must be previewed at the same point of time?

What is the actual hardware usable on the client machine?

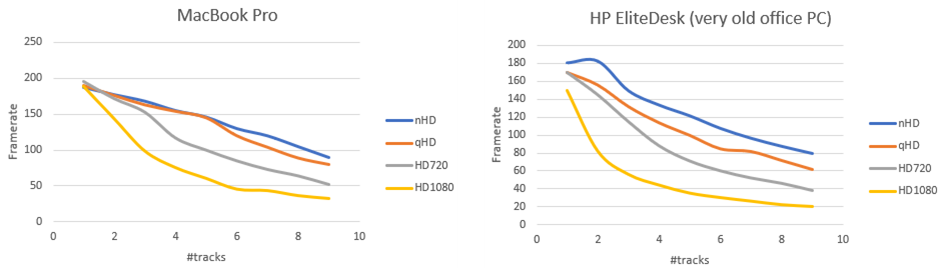

Two measurements are given here, in which our approach was evaluated on somewhat older hardware during the first step of implementation. Let’s use the following two machines as an example:

| Computer | HP EliteDesk 800 | MacBook Pro 2019 |
| --- | --- | --- |
| CPU | Intel Core i5-4590 4C/4T 3.30GHz | Intel Core i7-9750H 6C/12T 2.60GHz |
| GPU | Intel HD Graphics 4600 | Intel UHD Graphics 630 |
| Decoder | D3D11 | VDA |

We also assume a standard proxy video in the H.264 codec at different resolutions. In the graphs, the media abbreviations have the following meanings:

nHD: 640x360px, 25fps, H.264

qHD: 960x540px, 25fps, H.264

HD720: 1280x720px, 25fps, H.264

HD1080: 1920x1080px, 25fps, H.264

Using those two machines, we simulated how many videos could be decoded in parallel on each client machine. Put simply, this indicates how many videos the user can see at one timecode position. The measurements show that both machines could decode full HD media in multiple tracks. The MacBook handled nine parallel tracks at a decode speed of 32 fps, while the EliteDesk PC handled seven tracks at 26 fps. Taking 25 fps as the lower boundary for playback, this means that this number of parallel videos can be played back in real time on those machines.

Figure 3 Measurement of video performance in WRE PoC phase

Assuming a user creates a picture-in-picture situation on top of another video, this would mean they need three parallel tracks, as shown in the figure below:

Figure 4 Example situation of 4 parallel video tracks in WRE

Taking this as a reference, editing tasks typically done by journalists and content producers should be possible with Full HD media even on what we defined above as standard office PCs. Pushing the limits further by using a lower video resolution or a stronger client PC is of course possible.
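The reasoning above can be written down as a small check: count how many videos overlap at the busiest point of a timeline and compare that with what a machine was measured to decode. The figures are the HD1080 measurements quoted in this article. The function names are hypothetical, and the sketch assumes conservatively that a machine copes with any track count up to the measured one.

```typescript
// Sketch only: names are hypothetical; the figures are the HD1080 measurements above.

interface TimelineClip {
  track: number;
  startSec: number;
  endSec: number;
}

interface DecodeMeasurement {
  tracks: number; // parallel tracks decoded in the measurement
  decodeFps: number; // decode speed reached with that many tracks
}

const eliteDeskHd1080: DecodeMeasurement = { tracks: 7, decodeFps: 26 };
const macBookHd1080: DecodeMeasurement = { tracks: 9, decodeFps: 32 };

/** Number of clips visible at a given timecode position. */
function activeVideoCount(clips: TimelineClip[], timeSec: number): number {
  return clips.filter((c) => c.startSec <= timeSec && timeSec < c.endSec).length;
}

/** Highest number of simultaneously visible clips anywhere on the timeline. */
function peakParallelVideos(clips: TimelineClip[]): number {
  // The count can only increase where a clip starts, so checking starts is enough.
  let peak = 0;
  for (const clip of clips) {
    peak = Math.max(peak, activeVideoCount(clips, clip.startSec));
  }
  return peak;
}

/** True if the measured machine should play the required tracks in real time. */
function playsInRealTime(
  requiredTracks: number,
  measured: DecodeMeasurement,
  playbackFps: number = 25,
): boolean {
  return requiredTracks <= measured.tracks && measured.decodeFps >= playbackFps;
}
```

For a timeline whose busiest point shows three videos at once, `playsInRealTime(3, eliteDeskHd1080)` returns `true`, while eight parallel Full HD videos would exceed what was measured on the EliteDesk.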

What’s the best solution?

Comparing the editing options typically available to broadcasters gives the following overview.

| | Traditional NLE (Fat Client) | Special MAM editor & file access (Fat Client) | Download used natively in HTML (Web App) | Server Side Rendering (Web App) | Web Render Engine (Web App) |
| --- | --- | --- | --- | --- | --- |
| Pro | | | | | |
| Con | | | | | |

While most professional video editors who have suitable hardware and suitable file access tend to go with an NLE, with all editing possibilities at their fingertips, many editing tasks are not as complex or demanding as making a movie or a documentary. The users doing those tasks are often not editors with detailed experience of all the possibilities and technical details. For these users, a browser-based solution may be far more suitable.

Going for a fat client that is not an NLE probably only makes sense with regard to integration possibilities in a very specific environment.

When looking at the browser-based approaches, there are two aspects. On the one hand, downloading full media and presenting it without WebCodecs has limitations regarding latency and the accuracy of playback in a timeline situation. Other factors that matter are the needed network bandwidth and the available disk space on the client machine.

On the other hand, server-side rendering with streaming to the client seems to be the approach most commonly seen on the market. The biggest problem here is the hardware needed server side, and especially the difficulty of predicting it, as this depends a lot on the user interactions made.

The newly designed Web Render Engine combines the advantages of being accurate while having a quite small and predictable footprint inside the server infrastructure and allowing a fast user experience in comparison to any other browser-based approach. A drawback is that the client PC is introduced into the equation. However, we have shown that a standard office PC should be suitable for the job.

What’s up and what’s next?

The complete WRE approach was established for files in the H.264 and AAC codecs, as these are typically used as the proxy format in Vidispine MAM systems. It has been fully integrated into our own browser-based VidiEditor, which can do several tasks with client-side rendering, such as:

Multi track editing

Video and audio transitions

Video effects (blur, mosaic, position, scale etc.)

Audio leveling and routing

Voice Over recording

Sample rate conversion

Keyframing of video and audio effect parameters

Animated graphics

Subtitling / Closed Captions

Usage on the customer side has already shown a much faster workflow for users as well as improved scaling possibilities. The newly added Web Render Engine approach also offers great possibilities for future developments, such as an offline work mode, a move towards a progressive web app, more advanced video effect functionality, or simply downloading the preview video as a file directly from the web browser. Last but not least, the WRE design also allows custom code to be integrated, enabling broader support for a variety of file formats or specific processing tasks.

Want to know more about VidiEditor or usage of Web Render Engine? Reach out to us!

About the Author

Matthias Peine has worked in the media industry for over 12 years in various roles. At the moment he is Product Manager at Vidispine, especially in the field of browser-based video editing as well as transcoding and streaming technologies, where the approach presented above was implemented end to end.