Face Training and Analysis Powered by DeepVA Setup and Configuration [VN UG]

For this guide we assume that you have a VidiCore instance running, either as a VaaS running inside VidiNet or as a standalone VidiCore instance. You should also have an Amazon S3 storage connected to your VidiCore with some video content encoded as mp4.

Configuring a callback resource

To run the Face Training and Analysis service and detect known and unknown faces in your video content, you need to assign an S3 storage that VidiCore can use as a callback location for the data returned by the analysis.

The result from the training and analysis service consists of images and metadata, which are temporarily stored in the callback resource together with accompanying JavaScript job instructions for VidiCore to consume. As soon as the callback instructions have been successfully executed, all files related to the job are removed from the callback resource.

This resource could either be a folder in an existing bucket or a completely new bucket, assigned only for this purpose.

Important! Do not use a folder within a storage that VidiCore already uses as a storage resource. This avoids unnecessary scanning of the files written to the callback storage.

Example:

POST /API/resource

<ResourceDocument xmlns="http://xml.vidispine.com/schema/vidispine">

<callback>

<uri>s3://name:pass@example-bucket/folder1/</uri>

</callback>

</ResourceDocument>

This will return a resource-id for the callback resource which is to be used when running the analysis call.
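The request above can be assembled from Python. This is a minimal sketch, assuming HTTP Basic authentication and a hypothetical host `https://vidicore.example.com`; the helper names are illustrative and not part of VidiCore. It only builds the request and leaves sending it to the caller.

```python
import base64
import urllib.request
from xml.sax.saxutils import escape

VIDISPINE_NS = "http://xml.vidispine.com/schema/vidispine"


def callback_resource_document(s3_uri: str) -> str:
    """Return a ResourceDocument that registers s3_uri as a callback resource."""
    return (
        f'<ResourceDocument xmlns="{VIDISPINE_NS}">'
        f"<callback><uri>{escape(s3_uri)}</uri></callback>"
        "</ResourceDocument>"
    )


def build_create_resource_request(
    base_url: str, user: str, password: str, s3_uri: str
) -> urllib.request.Request:
    """Build (but do not send) the POST /API/resource request."""
    # Assumption: the instance accepts HTTP Basic authentication.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/API/resource",
        data=callback_resource_document(s3_uri).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/xml",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    request = build_create_resource_request(
        "https://vidicore.example.com",  # hypothetical host
        "admin",
        "admin",
        "s3://name:pass@example-bucket/folder1/",
    )
    print(request.get_method(), request.full_url)
    print(request.data.decode())
    # To actually send it: urllib.request.urlopen(request).read()
```

The identifier of the new callback resource is in the response body, which this sketch does not parse.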

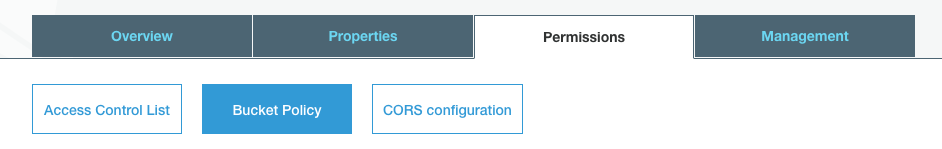

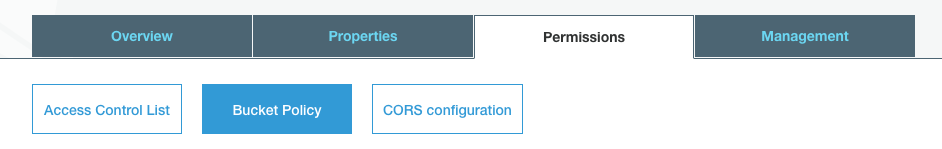

The S3 resource must also be configured to allow the cognitive service to put objects in the bucket. Attach the following bucket policy to the S3 resource:

{

"Version":"2012-10-17",

"Statement":[

{

"Effect":"Allow",

"Principal":{ "AWS":"arn:aws:iam::823635665685:user/cognitive-service" },

"Action":[

"s3:GetObject",

"s3:PutObject",

"s3:PutObjectAcl"

],

"Resource":[ "arn:aws:s3:::example-bucket/folder1/*" ]

}

]

}

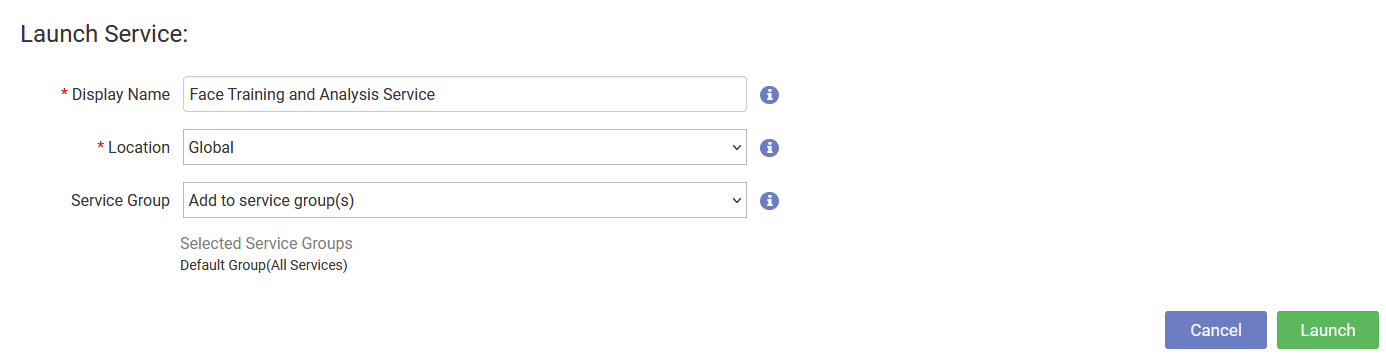
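If you manage several callback locations, the policy above can be generated rather than edited by hand. The following is a small sketch; the function name is hypothetical, and the principal ARN and actions are copied from the policy shown above.

```python
import json

# Principal taken from the policy in this guide.
COGNITIVE_SERVICE_PRINCIPAL = "arn:aws:iam::823635665685:user/cognitive-service"


def callback_bucket_policy(bucket: str, folder: str = "") -> dict:
    """Return the callback bucket policy for a bucket and optional folder."""
    folder = folder.strip("/")
    key_pattern = f"{folder}/*" if folder else "*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": COGNITIVE_SERVICE_PRINCIPAL},
                "Action": ["s3:GetObject", "s3:PutObject", "s3:PutObjectAcl"],
                "Resource": [f"arn:aws:s3:::{bucket}/{key_pattern}"],
            }
        ],
    }


if __name__ == "__main__":
    # Print JSON that can be pasted into the bucket policy editor.
    print(json.dumps(callback_bucket_policy("example-bucket", "folder1"), indent=2))
```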

Launching service

Before you can start analyzing your video content with the cognitive service you need to launch a Face Training and Analysis Service from the store in the VidiNet dashboard.

Automatic service attachment

If you are running your VidiCore instance as VidiCore-as-a-Service in VidiNet, you have the option to automatically connect your service to your VidiCore by choosing it from the drop-down presented during service launch.

Metadata field configuration will not be done automatically!

You must manually add the required metadata fields defined on this page.

Manual service attachment

When launching a media service in VidiNet you will get a ResourceDocument looking something like this:

<ResourceDocument xmlns="http://xml.vidispine.com/schema/vidispine">

<vidinet>

<url>vidinet://aaaaaaa-bbbb-cccc-eeee-fffffffffff:AAAAAAAAAAAAAAAAAAAA@aaaaaaa-bbbb-cccc-eeee-fffffffffff</url>

<endpoint>https://services.vidinet.nu</endpoint>

<type>COGNITIVE_SERVICE</type>

</vidinet>

</ResourceDocument>

Register the VidiNet service with your VidiCore instance by posting the ResourceDocument to the following API endpoint:

POST /API/resource/vidinet

Verifying service attachment

To verify that your new service has been connected to your VidiCore instance you can send a GET request to the VidiNet resource endpoint.

GET /API/resource/vidinet

You will receive a response containing the name, the status, and an identifier for each VidiNet media service, e.g. VX-10. Take note of the identifier for the Face Training and Analysis service as we will use it later. You should also be able to see any VidiCore instances connected to your Face Training and Analysis service in the VidiNet dashboard.

Adding required metadata fields in VidiCore

The analyzer resource needs a few extra metadata fields in VidiCore to store the metadata returned from the face training and analysis service. To see which fields need to be added, you can use the following API endpoint:

GET /API/resource/vidinet/{resourceId}/configuration/pre-check?displayData=true

This will return a document describing all the configuration required by the resource. To then apply the configuration, you can use the following call:

PUT /API/resource/vidinet/{resourceId}/configuration

For the Face Training and Analysis service, this will create the required service metadata fields, a new shape tag used for the resulting items created by the analysis, two custom job steps for merging and relabeling training material, and a collection called FaceTrainingDataset, which should be used to store all items used for training.
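The pre-check and apply calls can be prepared together. This sketch only builds the two requests; the host is hypothetical and authentication headers are deliberately left out, so add whatever your instance requires before sending.

```python
import urllib.request
from urllib.parse import quote


def configuration_requests(base_url: str, resource_id: str):
    """Build (but do not send) the pre-check and apply requests for a VidiNet resource."""
    root = (
        f"{base_url.rstrip('/')}/API/resource/vidinet/"
        f"{quote(resource_id, safe='')}/configuration"
    )
    pre_check = urllib.request.Request(f"{root}/pre-check?displayData=true", method="GET")
    apply_config = urllib.request.Request(root, method="PUT")
    return pre_check, apply_config


if __name__ == "__main__":
    # VX-10 is the example service identifier used in this guide.
    for request in configuration_requests("https://vidicore.example.com", "VX-10"):
        print(request.get_method(), request.full_url)
```

Reviewing the pre-check document before issuing the PUT makes it easier to see which fields, shape tags, and job steps will be created.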

Amazon S3 bucket configuration

Before you can start a training or analysis job, you need to allow a VidiNet IAM account read access to your S3 bucket. Attach the following bucket policy to your Amazon S3 bucket:

{

"Version":"2012-10-17",

"Statement":[

{

"Effect":"Allow",

"Principal":{ "AWS":"arn:aws:iam::823635665685:user/cognitive-service" },

"Action":"s3:GetObject",

"Resource":[ "arn:aws:s3:::{your-bucket-name}/*" ]

}

]

}

Training face detection model

To detect faces in our assets, we first need to train the model on faces. There are two ways to provide training material:

- Upload images of the persons' faces to the system, set the vcs_face_value metadata field on each item to the name of the person, and set the boolean metadata field vcs_face_isTrainingMaterial to true.

- Use the Face Dataset analysis to extract faces and name tags from our video assets.

Imported and extracted faces then need to be moved to a collection used specifically for training, i.e. the FaceTrainingDataset collection, in order to be included in the training job.
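For the first option, the two metadata fields can be set with a metadata document. The sketch below builds one in Python. It assumes that both fields are plain item-level fields (not nested in a metadata group) and that the document is sent with `PUT /API/item/{itemId}/metadata`; verify both assumptions against your own configuration. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

VIDISPINE_NS = "http://xml.vidispine.com/schema/vidispine"


def training_metadata_document(person_name: str) -> str:
    """Return a MetadataDocument that marks an item as training material for person_name."""
    ET.register_namespace("", VIDISPINE_NS)  # serialize with a default namespace
    root = ET.Element(f"{{{VIDISPINE_NS}}}MetadataDocument")
    # Assumption: non-timed metadata spans the whole item (-INF to +INF).
    timespan = ET.SubElement(
        root, f"{{{VIDISPINE_NS}}}timespan", {"start": "-INF", "end": "+INF"}
    )
    fields = (
        ("vcs_face_value", person_name),
        ("vcs_face_isTrainingMaterial", "true"),
    )
    for name, value in fields:
        field = ET.SubElement(timespan, f"{{{VIDISPINE_NS}}}field")
        ET.SubElement(field, f"{{{VIDISPINE_NS}}}name").text = name
        ET.SubElement(field, f"{{{VIDISPINE_NS}}}value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(training_metadata_document("Steven Stevenson"))
```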

When we have training material in our collection, we can run a training job to get the model trained on the assets:

POST /API/collection/{collectionId}/train?resourceId={vidinet-resource-id}&callbackId={callback-resource-id}

where {collectionId} is the identifier of our training collection, e.g. VX-46, {vidinet-resource-id} is the identifier of the VidiNet service that you previously added, and {callback-resource-id} is the identifier of the callback resource we initially created, e.g. VX-2. A job ID is returned, and you can query VidiCore for the status of the job or check it in the VidiNet dashboard.

Once the training job has finished, the DeepVA model has been trained, and instructions are returned to VidiCore via the callback resource to update the state of all assets included in the training. We can see the status of each item and shape in the model by looking at the metadata field vcs_face_method on both items and shapes, which should now have the value TRAINED, and the field vcs_face_status on the shape, which should tell us that the image shape is ACTIVE in the trained DeepVA model.

Running a detection job

To start a detection job on a video item using the VCS Face analysis service, perform the following API call:

POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}&callbackId={callback-resource-id}

where {itemId} is the item's identifier, e.g. VX-46, {vidinet-resource-id} is the identifier of the VidiNet service that you previously added, and {callback-resource-id} is the identifier of the callback resource we initially created, e.g. VX-2. A job ID is returned, and you can query VidiCore for the status of the job or check it in the VidiNet dashboard.

The analysis service creates callback instructions in the callback resource that instruct VidiCore to import the metadata returned from the DeepVA analysis, as well as any new fingerprinted faces found in the video (see the Fingerprints section below). The callback instructions also make sure that metadata for fingerprinted items that have been removed from the VidiCore system is not imported.

When the job has completed, the resulting metadata can be read from the item using for instance:

GET /API/item/{itemId}?content=metadata

A small part of the result may look something like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<ItemDocument id="VX-108" xmlns="http://xml.vidispine.com/schema/vidispine">

<metadata>

<revision>VX-690,VX-785,VX-689,VX-692,VX-915,VX-693</revision>

<timespan start="225@PAL" end="226@PAL">

<group uuid="7c5919bf-faee-432e-91a3-ccddb5de000d" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">

<name>adu_face_DeepVAKeyframeAnalyzer</name>

<field uuid="74d2ecbd-1099-4f11-8ed8-2f7af8af6dbb" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">

<name>adu_analyzerId</name>

<value uuid="92b77072-a0b6-4259-bd9f-7c29d83eeae2" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">DeepVAKeyframeAnalyzer</value>

</field>

<field uuid="55c2c309-232e-4935-a28a-a7344f017a57" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">

<name>adu_analysisType</name>

<value uuid="24620159-795a-418b-9183-433816350554" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">face</value>

</field>

<field uuid="4b91d4cd-7e4c-4355-a2a9-de30492e3b81" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">

<name>adu_creationDate</name>

<value uuid="c3bd6be3-fff0-4ef1-b098-b490afe1de21" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">2021-08-18T08:00:46</value>

</field>

<field uuid="a3728461-c8a3-4e19-9d9f-9848a94142eb" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">

<name>adu_analysisMonitorId</name>

<value uuid="ec457f36-e8dc-4814-b7c9-36be28e1e888" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">2a3e5bed-fdac-4076-81b1-c6586e4c5c5d</value>

</field>

<field uuid="36d506e6-8147-4729-8f6c-cf2fd7cb8c07" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">

<name>adu_value</name>

<value uuid="127d195e-c581-4ea2-a0d0-e5d4d350d8f4" user="admin" timestamp="2021-08-18T10:03:15.417+02:00" change="VX-915">Steven Stevenson</value>

</field>

</group>

</timespan>

...

This tells us the time span in which Steven Stevenson was detected in the item.
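To read detections like the one above programmatically, the item document can be parsed with a namespace-aware XML parser. The following sketch uses Python's standard library; the group and field names are taken from the sample response, while the function name and the trimmed `SAMPLE` document are illustrative.

```python
import xml.etree.ElementTree as ET

NS = {"v": "http://xml.vidispine.com/schema/vidispine"}

# Trimmed, well-formed version of the response shown above.
SAMPLE = """
<ItemDocument id="VX-108" xmlns="http://xml.vidispine.com/schema/vidispine">
  <metadata>
    <timespan start="225@PAL" end="226@PAL">
      <group>
        <name>adu_face_DeepVAKeyframeAnalyzer</name>
        <field><name>adu_analysisType</name><value>face</value></field>
        <field><name>adu_value</name><value>Steven Stevenson</value></field>
      </group>
    </timespan>
  </metadata>
</ItemDocument>
"""


def detected_faces(item_xml: str, group_name: str = "adu_face_DeepVAKeyframeAnalyzer"):
    """Return (start, end, name) tuples for every face detection in the item metadata."""
    root = ET.fromstring(item_xml)
    detections = []
    for timespan in root.iterfind("./v:metadata/v:timespan", NS):
        for group in timespan.iterfind("v:group", NS):
            if group.findtext("v:name", default="", namespaces=NS) != group_name:
                continue  # some other metadata group
            for field in group.iterfind("v:field", NS):
                if field.findtext("v:name", namespaces=NS) != "adu_value":
                    continue  # only adu_value carries the detected name
                for value in field.iterfind("v:value", NS):
                    detections.append(
                        (timespan.get("start"), timespan.get("end"), value.text)
                    )
    return detections


if __name__ == "__main__":
    for start, end, name in detected_faces(SAMPLE):
        print(f"{name}: {start} to {end}")
```

The time codes are returned as they appear in the document, without conversion to seconds.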

Fingerprints

The job also creates new items containing cropped frames of faces found in the video, as well as time-coded metadata on the source item referring to these entities, which are faces not yet known to the model. These faces are nevertheless fingerprinted by the analyzer, which is useful in several scenarios:

- As an easy form of face detection, with faces that can be named and merged together as you go.

- As a way of finding new faces in frames to generate source material from.

- As an easy way of merging an “Unknown” face with a trained person once that person has been created.

The items are returned in a collection named FoundByFaceAnalysis, which the callback script creates if it does not already exist. The name of the collection used for returned fingerprints can be specified using the configuration parameter UnknownCollectionName.

The items and the time-coded metadata on the source item reference each other by a fingerprint ID, so you can update the name in the time-coded metadata simply by renaming or merging the item. An item of this type consists of one image shape representing the thumbnail extracted by DeepVA, and has the item and shape metadata field vcs_face_method set to INDEXED and the shape metadata field vcs_face_status set to ACTIVE.

Indexed fingerprint shapes, i.e. those with the shape metadata field vcs_face_method set to INDEXED, are not included in the training of your DeepVA model. They are still used in the analysis to determine whether a detected face is similar to a previously detected face, and therefore whether a new item should be created for it.

When you delete an item of the type “unknown”, the indexed entity is not removed from the DeepVA database, and it is ignored when items are reanalyzed. This lets you prevent faces that you do not want recognized from being reimported.

Configuration parameters

You can pass additional parameters to the service such as confidence threshold or which collection to save unknown identities in. You pass these parameters as query parameters to the VidiCore API. Parameters can be passed to both analysis and training.

Analysis

For instance, if we wanted to change the confidence threshold to 0.75 and save unknown identities to the collection UnknownIdentityCollection, we could perform the following API call:

POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}&jobmetadata=cognitive_service_ConfidenceThreshold=0.75&jobmetadata=cognitive_service_UnknownCollectionName=UnknownIdentityCollection

See the table below for all parameters that can be passed to the analysis and their default values. Parameter names must be prefixed by cognitive_service_.

| Parameter name | Default value | Description |
|---|---|---|
| | 0.5 | The threshold for face recognition confidence. |
| | 300 | Maximum length of consolidated time span for identical detections. |
| | true | If true, the analysis will look for faces not recognized as a trained identity and import them into the system as an unknown identity. |
| UnknownCollectionName | FoundByFaceAnalysis | Which collection any new unknown identities should be added to. |
| | null | Which storage the new samples should be imported to. |
| | 0.9 | The threshold for how confident the service must be about a face detection. |
| | 3 | The time in seconds between each keyframe that will be analyzed. A smaller number increases accuracy but also increases the cost. |
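Because every parameter is passed as a repeated jobmetadata query parameter with the cognitive_service_ prefix, a small helper can assemble the URL. This is a sketch with a hypothetical function name and host; the same helper works for the training call by passing the collection path instead of the item path.

```python
from urllib.parse import urlencode

PARAMETER_PREFIX = "cognitive_service_"


def service_job_url(base_url, path, resource_id, callback_id=None, **parameters):
    """Return a job URL with each parameter passed as jobmetadata=<prefix><name>=<value>."""
    query = [("resourceId", resource_id)]
    if callback_id is not None:
        query.append(("callbackId", callback_id))
    for name, value in parameters.items():
        query.append(("jobmetadata", f"{PARAMETER_PREFIX}{name}={value}"))
    # safe="=" keeps the inner "=" literal, matching the form used in this guide.
    return f"{base_url.rstrip('/')}{path}?{urlencode(query, safe='=')}"


if __name__ == "__main__":
    print(
        service_job_url(
            "https://vidicore.example.com",  # hypothetical host
            "/API/item/VX-46/analyze",
            "VX-10",
            ConfidenceThreshold=0.75,
            UnknownCollectionName="UnknownIdentityCollection",
        )
    )
```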

Training

For instance, if we wanted to set the minimum quality of the faces when training, we could perform the following API call:

POST /API/collection/{collectionId}/train?resourceId={vidinet-resource-id}&callbackId={callback-resource-id}&jobmetadata=cognitive_service_QualitySetting=Mid

See the table below for all parameters that can be passed to the training and their default values. Parameter names must be prefixed by cognitive_service_.

| Parameter name | Default value | Description |
|---|---|---|
| QualitySetting | Low | Minimum accepted quality of the faces to train. Supported quality settings are: |