Cognitive Video and Image Analysis Setup and Configuration

For this guide we assume that you have a VidiCore instance running, either as a VaaS running inside VidiNet or as a standalone VidiCore instance. You should also have an Amazon S3 storage connected to your VidiCore instance, with some imported images and/or videos encoded in a supported format. See the Amazon Rekognition FAQ for supported formats.

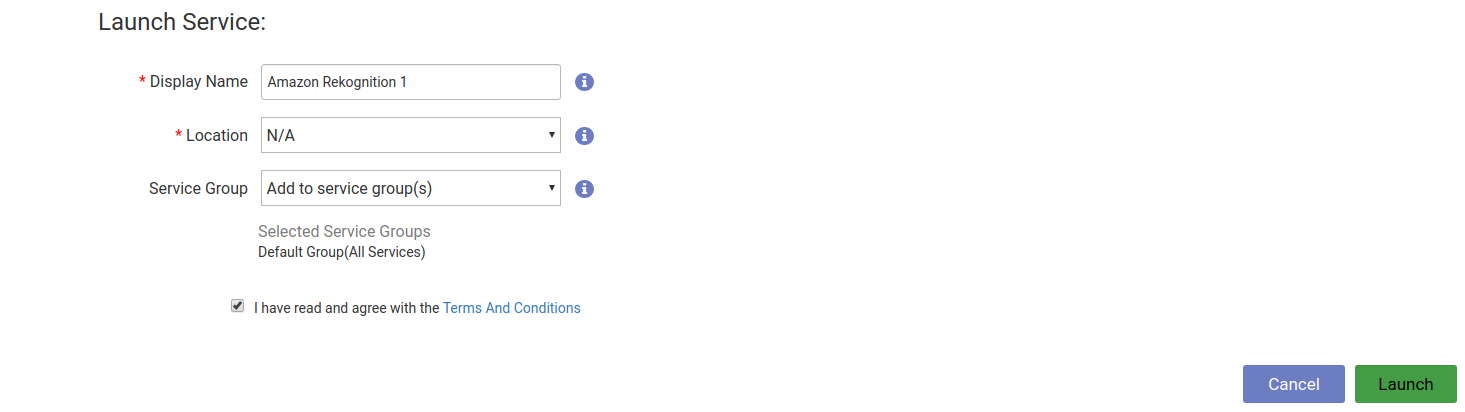

Launching service

Before you can start analyzing your content, you need to launch a Video and Image Analysis service from the store in the VidiNet dashboard.

Automatic service attachment

If you are running your VidiCore instance as VidiCore-as-a-Service in VidiNet, you can automatically connect the service to your VidiCore instance by choosing it from the drop-down presented during service launch.

Manual service attachment

When launching a media service in VidiNet you will get a ResourceDocument looking something like this:

    <ResourceDocument xmlns="http://xml.vidispine.com/schema/vidispine">
      <vidinet>
        <url>vidinet://aaaaaaa-bbbb-cccc-eeee-fffffffffff:AAAAAAAAAAAAAAAAAAAA@aaaaaaa-bbbb-cccc-eeee-fffffffffff</url>
        <endpoint>https://services.vidinet.nu</endpoint>
        <type>COGNITIVE_SERVICE</type>
      </vidinet>
    </ResourceDocument>

Register the VidiNet service with your VidiCore instance by posting the ResourceDocument to the following API endpoint:

    POST /API/resource/vidinet

Verifying service attachment

To verify that your new service has been connected to your VidiCore instance you can send a GET request to the VidiNet resource endpoint.

    GET /API/resource/vidinet

You will receive a response containing the name, status, and identifier (e.g. VX-10) of each VidiNet media service. Take note of the identifier for the Video and Image Analysis service, as we will use it later. You should also be able to see any VidiCore instances connected to your Video and Image Analysis service in the VidiNet dashboard.
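
The registration and verification calls above can be sketched in Python as follows. This is a minimal sketch, not a definitive client: the base URL, the credentials, the use of HTTP Basic authentication, and the `application/xml` content type are assumptions for illustration, and the helper names are hypothetical. The functions only build the requests; nothing is sent until you pass a request to `urllib.request.urlopen`.

```python
import base64
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Return the value of an HTTP Basic Authorization header (assumed auth scheme)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_register_request(base_url: str, resource_xml: str,
                           user: str, password: str) -> urllib.request.Request:
    """Build the POST that registers a VidiNet service from a ResourceDocument."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/API/resource/vidinet",
        data=resource_xml.encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/xml",  # assumption: the document is posted as XML
            "Authorization": basic_auth_header(user, password),
        },
    )


def build_verify_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build the GET that lists the VidiNet services attached to the instance."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/API/resource/vidinet",
        method="GET",
        headers={"Authorization": basic_auth_header(user, password)},
    )
```

To actually send a request, something like `urllib.request.urlopen(build_verify_request("http://localhost:8080", "admin", "admin"))` should work against a reachable instance; the host and credentials shown here are placeholders.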

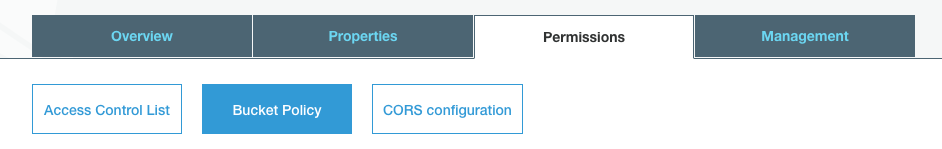

Amazon S3 bucket configuration

Before you can start a detection job, you need to allow the VidiNet IAM account read access to your S3 bucket. Attach the following bucket policy to your Amazon S3 bucket:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::823635665685:user/cognitive-service"
          },
          "Action": "s3:GetObject",
          "Resource": [
            "arn:aws:s3:::{your-bucket-name}/*"
          ]
        }
      ]
    }

To use the service with an S3 bucket that has SSE-KMS encryption enabled, the KMS key policy needs a statement such as:

    {
      "Sid": "Enable VidiNet Decrypt",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::823635665685:user/cognitive-service"
      },
      "Action": "kms:Decrypt",
      "Resource": "*"
    }

The resource can be scoped more narrowly to the specific bucket that you are using with the Cognitive Service.
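
If you manage several buckets, the two policy documents above can be generated rather than edited by hand. The following is a small sketch under that assumption; the function names and the example bucket name are hypothetical, while the principal ARN, actions, and statement contents are copied from the policies above.

```python
import json

# Principal taken from the policies shown above.
VIDINET_PRINCIPAL = "arn:aws:iam::823635665685:user/cognitive-service"


def bucket_policy(bucket_name: str) -> dict:
    """Return a bucket policy granting the VidiNet account read access to all objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": VIDINET_PRINCIPAL},
                "Action": "s3:GetObject",
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


def kms_decrypt_statement() -> dict:
    """Return the key policy statement that lets the VidiNet account decrypt objects."""
    return {
        "Sid": "Enable VidiNet Decrypt",
        "Effect": "Allow",
        "Principal": {"AWS": VIDINET_PRINCIPAL},
        "Action": "kms:Decrypt",
        "Resource": "*",
    }


if __name__ == "__main__":
    # "my-media-bucket" is a placeholder bucket name.
    print(json.dumps(bucket_policy("my-media-bucket"), indent=2))
    print(json.dumps(kms_decrypt_statement(), indent=2))
```

The printed JSON can then be pasted into the bucket policy editor and the KMS key policy, respectively.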

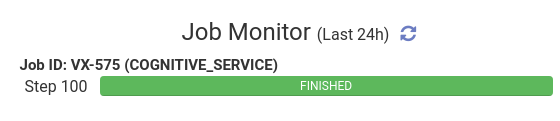

Running a detection job

To start a detection job on an item using the Video and Image Analysis service we just registered, perform the following API call:

    POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}

where {itemId} is the item's identifier (e.g. VX-46) and {vidinet-resource-id} is the identifier of the VidiNet service that you previously added (e.g. VX-10). You will get a JobId in the response, which you can use to query the VidiCore API job endpoint /API/job for the status of the job. You can also check the status in the VidiNet dashboard.
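
As an illustration, the URLs for starting the job and for checking on it afterwards could be assembled like this. It is only a sketch: the base URL is a placeholder, the helper names are hypothetical, and appending the job identifier to /API/job to address a single job is an assumption rather than something stated above.

```python
from urllib.parse import quote, urlencode


def analyze_url(base_url: str, item_id: str, resource_id: str) -> str:
    """URL to POST to in order to start a detection job on an item."""
    query = urlencode({"resourceId": resource_id})
    return f"{base_url.rstrip('/')}/API/item/{quote(item_id, safe='')}/analyze?{query}"


def job_url(base_url: str, job_id: str) -> str:
    """URL to GET for the status of one job (assumed form: /API/job/{jobId})."""
    return f"{base_url.rstrip('/')}/API/job/{quote(job_id, safe='')}"


if __name__ == "__main__":
    print(analyze_url("http://localhost:8080", "VX-46", "VX-10"))
    # http://localhost:8080/API/item/VX-46/analyze?resourceId=VX-10
```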

When the job has completed, the resulting metadata can be read from the item using, for instance:

    GET /API/item/{itemId}/metadata

A small part of the result may look something like this:

    {
      "name": "adu_av_analyzedValue",
      "field": [
        {
          "name": "adu_av_value",
          "value": [
            {
              "value": "Tree",
              "uuid": "55abc877-7757-457d-969e-7e4ab21fa61f",
              "user": "admin",
              "timestamp": "2020-01-01T00:00:00.000+0000",
              "change": "VX-92"
            }
          ]
        },
        {
          "name": "adu_av_confidence",
          "value": [
            {
              "value": "0.98",
              "uuid": "5a2a7d71-de2c-41c1-a6c4-f2376fdd2728",
              "user": "admin",
              "timestamp": "2020-01-01T00:00:00.000+0000",
              "change": "VX-92"
            }
          ]
        }
      ]
    }

This tells us that a tree was detected with 98% confidence in the item.
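
Reading the detection out of such a group programmatically could look like the sketch below. It assumes the group has exactly the shape of the excerpt above (a `field` list whose entries each carry a `value` list); the helper names are hypothetical, and the sample is trimmed to the keys the code uses.

```python
def flatten_group(group: dict) -> dict:
    """Map each field name in a metadata group to its first value."""
    result = {}
    for field in group.get("field", []):
        values = field.get("value", [])
        if values:
            result[field["name"]] = values[0]["value"]
    return result


def detection(group: dict) -> tuple:
    """Return (label, confidence) for an adu_av_analyzedValue group."""
    flat = flatten_group(group)
    return flat["adu_av_value"], float(flat["adu_av_confidence"])


# Trimmed copy of the excerpt above.
SAMPLE_GROUP = {
    "name": "adu_av_analyzedValue",
    "field": [
        {"name": "adu_av_value", "value": [{"value": "Tree"}]},
        {"name": "adu_av_confidence", "value": [{"value": "0.98"}]},
    ],
}

if __name__ == "__main__":
    print(detection(SAMPLE_GROUP))  # ('Tree', 0.98)
```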

Configuration parameters

You can pass additional parameters to the service, such as which pre-trained model to use or the confidence threshold. These are passed as query parameters to the VidiCore API. For instance, to run content moderation detection with a lower confidence threshold for including values in the metadata, we could perform the following API call:

    POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}&jobmetadata=cognitive_service_AnalysisType=Moderation&jobmetadata=cognitive_service_ConfidenceThreshold=0.5

See the table below for all parameters that can be passed to the Video and Image Analysis service and their default values. Parameter names must be prefixed by cognitive_service_.

| Parameter name | Default value | Description |
|---|---|---|
| AnalysisType | Label | A string that sets the type of pre-trained model used for the analysis. Video models supported are: The same models are supported for images as well as: |
| ConfidenceThreshold | 0.9 | The confidence required for a value to be included in the resulting metadata. (0.0 - 1.0) |
| TimespanConsolidation | true | If true, merge identical detections in quick succession into a single metadata entry. Only applies to video. |
| BackTobackMaxLengthInMilliseconds | 1500 | Maximum length of consolidated time span for identical detections. Only relevant when TimespanConsolidation is true. Only applies to video. |
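
To avoid forgetting the cognitive_service_ prefix, the query string can be assembled by a small helper such as the one sketched below. The function name is hypothetical, and the range check on ConfidenceThreshold is a convenience derived from the table rather than documented client behavior. The `=` inside each jobmetadata value is left unescaped to mirror the example call in this section.

```python
from urllib.parse import urlencode

PREFIX = "cognitive_service_"


def analyze_query(resource_id: str, **params) -> str:
    """Build the query string for the analyze call, prefixing each service parameter."""
    threshold = params.get("ConfidenceThreshold")
    if threshold is not None and not 0.0 <= float(threshold) <= 1.0:
        raise ValueError("ConfidenceThreshold must be between 0.0 and 1.0")

    pairs = [("resourceId", resource_id)]
    for name, value in params.items():
        if isinstance(value, bool):
            value = str(value).lower()  # the table writes booleans as true/false
        pairs.append(("jobmetadata", f"{PREFIX}{name}={value}"))
    # safe="=" keeps the inner '=' literal, as in the example call.
    return urlencode(pairs, safe="=")


if __name__ == "__main__":
    print(analyze_query("VX-10", AnalysisType="Moderation", ConfidenceThreshold=0.5))
```

Each keyword argument becomes one jobmetadata entry, so the call in the `__main__` block reproduces the query string of the content moderation example with VX-10 as the resource identifier.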