Face and Label Extractor Powered by DeepVA: Setup and Configuration

For this guide, we assume that you have a VidiCore instance running, either as a VidiCore-as-a-Service (VaaS) instance in VidiNet or as a standalone VidiCore instance. You should also have an Amazon S3 storage connected to your VidiCore instance, containing some video content encoded as MP4.

Configuring a callback resource

To run the Face and Label Extractor and extract frames and lower-third name tags from your video content, you need to assign an S3 storage location that VidiCore can use as a callback location for the data returned by the analysis.

The result from the analysis service consists of images and metadata, which are temporarily stored in the callback resource together with accompanying JavaScript job instructions for VidiCore to consume. As soon as the callback instructions have been successfully executed, all files related to the job are removed from the callback resource.

This resource can be either a folder in an existing bucket or a completely new bucket dedicated to this purpose.

Important! Do not use a folder within a storage that VidiCore already uses as a storage resource. This avoids unnecessary scanning of the files written to the callback storage.

Example:

POST /API/resource

<ResourceDocument xmlns="http://xml.vidispine.com/schema/vidispine">
  <callback>
    <uri>s3://name:pass@example-bucket/folder1/</uri>
  </callback>
</ResourceDocument>

This returns the ID of the new callback resource, which you pass when starting the analysis job.
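The callback resource can also be created from a script. The sketch below uses only Python's standard library and builds the request without sending it. The base URL `https://my-vidicore`, the `admin`/`secret` credentials and the use of HTTP Basic authentication are assumptions for illustration; adapt them to how your VidiCore instance is secured.

```python
import base64
import urllib.request
from xml.sax.saxutils import escape

VS_NS = "http://xml.vidispine.com/schema/vidispine"


def callback_resource_document(uri: str) -> bytes:
    """ResourceDocument declaring a callback resource at the given S3 URI."""
    return (
        f'<ResourceDocument xmlns="{VS_NS}">'
        f"<callback><uri>{escape(uri)}</uri></callback>"
        "</ResourceDocument>"
    ).encode("utf-8")


def create_callback_request(base_url: str, user: str, password: str, uri: str) -> urllib.request.Request:
    """Build (but do not send) POST /API/resource for a new callback resource.

    Assumes HTTP Basic authentication; adjust if your VidiCore uses another scheme.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/API/resource",
        data=callback_resource_document(uri),
        method="POST",
        headers={"Content-Type": "application/xml", "Authorization": f"Basic {token}"},
    )
```

Pass the returned request to `urllib.request.urlopen` to send it, then read the new resource ID from the response and keep it for the analyze call.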

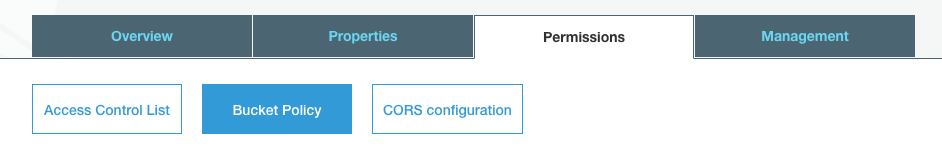

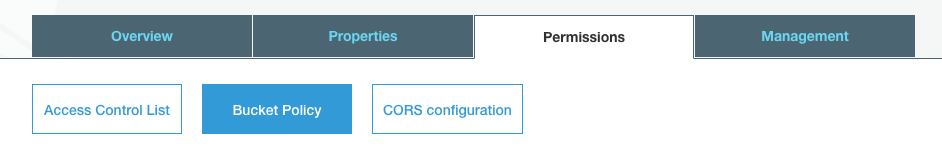

The callback S3 bucket must also allow the cognitive service to put objects in it. Attach the following bucket policy to the bucket:

{
  "Version":"2012-10-17",
  "Statement":[
    {
      "Effect":"Allow",
      "Principal":{
        "AWS":"arn:aws:iam::823635665685:user/cognitive-service"
      },
      "Action":[
        "s3:GetObject",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource":[ "arn:aws:s3:::example-bucket/folder1/*" ]
    }
  ]
}
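If you manage several callback locations, the policy above can be generated per bucket and folder. A minimal sketch, assuming you only need to substitute the bucket name and prefix; the function name is hypothetical and the principal ARN and actions are copied from the policy above.

```python
import json

# Principal ARN and actions copied from the bucket policy above.
COGNITIVE_SERVICE_PRINCIPAL = "arn:aws:iam::823635665685:user/cognitive-service"


def callback_bucket_policy(bucket: str, prefix: str) -> dict:
    """Bucket policy letting the cognitive service write results under bucket/prefix."""
    prefix = prefix.strip("/")
    # An empty prefix means the whole bucket is used as the callback location.
    resource = f"arn:aws:s3:::{bucket}/{prefix}/*" if prefix else f"arn:aws:s3:::{bucket}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": COGNITIVE_SERVICE_PRINCIPAL},
                "Action": ["s3:GetObject", "s3:PutObject", "s3:PutObjectAcl"],
                "Resource": [resource],
            }
        ],
    }


def policy_json(bucket: str, prefix: str) -> str:
    """Serialize the policy, e.g. to save as policy.json for the AWS CLI."""
    return json.dumps(callback_bucket_policy(bucket, prefix), indent=2)
```

The saved JSON can be applied with `aws s3api put-bucket-policy --bucket example-bucket --policy file://policy.json`. Note that this replaces any existing bucket policy, so merge the statement into the current policy first if the bucket already has one.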

To use the service with an S3 bucket that has SSE-KMS encryption enabled, the KMS key policy must include a statement such as:

{
  "Sid": "Enable VidiNet Decrypt",
  "Effect": "Allow",
  "Principal": {
    "AWS": "arn:aws:iam::823635665685:user/cognitive-service"
  },
  "Action": "kms:Decrypt",
  "Resource": "*"
}

The statement can be scoped more narrowly, to the specific bucket that you use with the Cognitive Service.
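Because a key policy usually already contains other statements, the VidiNet statement has to be merged into it rather than replacing it. A minimal sketch of such a merge, assuming the key policy has been loaded as a Python dict (for example from the output of `aws kms get-key-policy`); the function name is hypothetical.

```python
import copy

# Statement copied from the KMS key policy example above.
VIDINET_DECRYPT_STATEMENT = {
    "Sid": "Enable VidiNet Decrypt",
    "Effect": "Allow",
    "Principal": {"AWS": "arn:aws:iam::823635665685:user/cognitive-service"},
    "Action": "kms:Decrypt",
    "Resource": "*",
}


def add_vidinet_decrypt(key_policy: dict) -> dict:
    """Return a copy of a KMS key policy with the VidiNet decrypt statement added.

    The statement is matched by its Sid, so applying this twice does not
    duplicate it. The input policy is left unmodified.
    """
    policy = copy.deepcopy(key_policy)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    if not any(s.get("Sid") == VIDINET_DECRYPT_STATEMENT["Sid"] for s in statements):
        statements.append(copy.deepcopy(VIDINET_DECRYPT_STATEMENT))
    policy["Statement"] = statements
    return policy
```

The merged policy can then be written back with `aws kms put-key-policy`. Review the result before applying it, since a malformed key policy can lock you out of the key.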

Launching the service

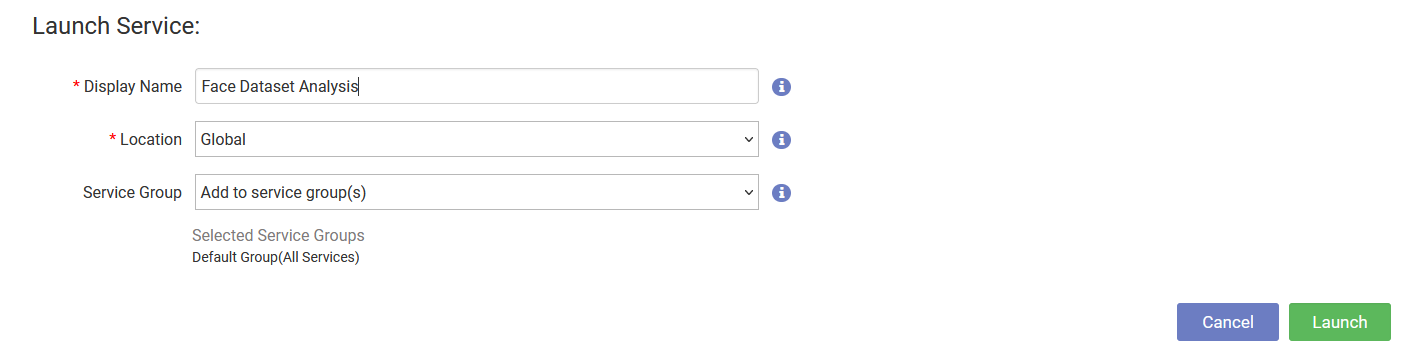

Before you can start analyzing your video content, you need to launch a Face and Label Extractor service from the store in the VidiNet dashboard.

Automatic service attachment

If you are running your VidiCore instance as VidiCore-as-a-Service in VidiNet, you have the option to connect your service to your VidiCore automatically by choosing it from the drop-down list presented during service launch.

Metadata field configuration will not be done automatically!

You must add the required metadata fields manually, as described in Adding required metadata fields in VidiCore.

Manual service attachment

When you launch a media service in VidiNet, you get a ResourceDocument similar to this:

<ResourceDocument xmlns="http://xml.vidispine.com/schema/vidispine">
  <vidinet>
    <url>vidinet://aaaaaaa-bbbb-cccc-eeee-fffffffffff:AAAAAAAAAAAAAAAAAAAA@aaaaaaa-bbbb-cccc-eeee-fffffffffff</url>
    <endpoint>https://services.vidinet.nu</endpoint>
    <type>COGNITIVE_SERVICE</type>
  </vidinet>
</ResourceDocument>

Register the VidiNet service with your VidiCore instance by posting the ResourceDocument to the following API endpoint:

POST /API/resource/vidinet

Verifying service attachment

To verify that your new service has been connected to your VidiCore instance, send a GET request to the VidiNet resource endpoint:

GET /API/resource/vidinet

The response contains the name, the status, and an identifier (for example VX-10) for each VidiNet media service. Take note of the identifier for the Face and Label Extractor service, as you will need it later. You should also be able to see any VidiCore instances connected to your Face and Label Extractor service in the VidiNet dashboard.
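The registration and verification calls above can be scripted as well. In this sketch the base URL, credentials and HTTP Basic authentication are assumptions, and the helper names are hypothetical. The ResourceDocument is posted exactly as copied from the VidiNet dashboard, and the helpers only build the requests; sending them is left to `urllib.request.urlopen`.

```python
import base64
import urllib.request


def vidicore_request(base_url, user, password, method, path, body=None):
    """Build (but do not send) an authenticated VidiCore API request.

    Assumes HTTP Basic authentication.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    headers = {"Authorization": f"Basic {token}", "Accept": "application/xml"}
    if body is not None:
        headers["Content-Type"] = "application/xml"
    return urllib.request.Request(
        f"{base_url.rstrip('/')}{path}", data=body, headers=headers, method=method
    )


def register_vidinet_service(base_url, user, password, resource_document: str):
    """POST /API/resource/vidinet with the ResourceDocument from the dashboard."""
    return vidicore_request(
        base_url, user, password, "POST", "/API/resource/vidinet",
        resource_document.encode("utf-8"),
    )


def list_vidinet_services(base_url, user, password):
    """GET /API/resource/vidinet to check that the service is attached."""
    return vidicore_request(base_url, user, password, "GET", "/API/resource/vidinet")
```

The identifier you note from the listing response is the `{vidinet-resource-id}` used in the calls that follow.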

Adding required metadata fields in VidiCore

The analyzer resource requires a few extra metadata fields in VidiCore to store the metadata returned from the Face and Label Extractor service. To see which fields need to be added, use the following API endpoint:

GET /API/resource/vidinet/{resourceId}/configuration/pre-check?displayData=true

This returns a document describing all the configuration required by the resource. To apply the configuration, use the following call:

PUT /API/resource/vidinet/{resourceId}/configuration

For the Face and Label Extractor, this creates the required service metadata fields as well as a new shape tag used for the items created by the analysis.
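The pre-check and apply calls differ only in method and path. A minimal sketch that builds both URLs for a given service ID; the base URL and function names are hypothetical, and the sketch assumes the PUT needs no request body, since none is described here.

```python
from urllib.parse import quote, urlencode


def precheck_url(base_url: str, resource_id: str) -> str:
    """URL to GET for the list of configuration the service requires."""
    query = urlencode({"displayData": "true"})
    return (
        f"{base_url.rstrip('/')}/API/resource/vidinet/"
        f"{quote(resource_id)}/configuration/pre-check?{query}"
    )


def apply_configuration_url(base_url: str, resource_id: str) -> str:
    """URL to PUT to create the required metadata fields and shape tag."""
    return f"{base_url.rstrip('/')}/API/resource/vidinet/{quote(resource_id)}/configuration"
```

Running the pre-check first lets you review what will be created before the PUT changes your VidiCore configuration.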

Amazon S3 bucket configuration

Before you can start a face and label extraction job, you need to give a VidiNet IAM account read access to your S3 bucket. Attach the following bucket policy to your Amazon S3 bucket:

{
  "Version":"2012-10-17",
  "Statement":[
    {
      "Effect":"Allow",
      "Principal":{
        "AWS":"arn:aws:iam::823635665685:user/cognitive-service"
      },
      "Action":"s3:GetObject",
      "Resource":[ "arn:aws:s3:::{your-bucket-name}/*" ]
    }
  ]
}
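If the bucket already carries a policy, it can help to check whether an equivalent grant is present before editing it. The following rough check parses a bucket policy (for example the string printed by `aws s3api get-bucket-policy --bucket {your-bucket-name} --query Policy --output text`) and looks for an Allow statement matching the one above. It is a simplification with a hypothetical function name, not an IAM policy evaluator: it uses exact string matching and ignores Deny statements, conditions and partial wildcards.

```python
import json

VIDINET_PRINCIPAL = "arn:aws:iam::823635665685:user/cognitive-service"


def _as_list(value):
    """IAM policy fields may hold a single string/object or a list of them."""
    return value if isinstance(value, list) else [value]


def grants_vidinet_read(policy_json: str, bucket: str) -> bool:
    """Rough check: does an Allow statement give the VidiNet user s3:GetObject on bucket/*?

    Exact string matching only; Deny statements, Conditions and partial
    wildcards are not evaluated.
    """
    wanted_resource = f"arn:aws:s3:::{bucket}/*"
    policy = json.loads(policy_json)
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal", {})
        if isinstance(principal, dict):
            principals = _as_list(principal.get("AWS", []))
        else:
            principals = _as_list(principal)
        actions = set(_as_list(stmt.get("Action", [])))
        resources = _as_list(stmt.get("Resource", []))
        if (
            VIDINET_PRINCIPAL in principals
            and actions & {"s3:GetObject", "s3:*"}
            and wanted_resource in resources
        ):
            return True
    return False
```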

Running a Face and Label Extractor job

To start an analysis job on an item using the Face and Label Extractor service we just registered, perform the following API call to your VidiCore instance:

POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}&callbackId={callback-resource-id}

where {itemId} is the item's identifier (e.g. VX-46), {vidinet-resource-id} is the identifier of the VidiNet service that you previously added (e.g. VX-10), and {callback-resource-id} is the identifier of the callback resource we initially created (e.g. VX-2). The call returns a job ID. You can query VidiCore for the status of the job or check it in the VidiNet dashboard.
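A small helper can assemble the analyze URL from the three identifiers, together with a URL for polling the returned job. The job path `/API/job/{jobId}` is assumed here to be VidiCore's general job endpoint; the base URL, IDs and function names are placeholders.

```python
from urllib.parse import quote, urlencode


def analyze_url(base_url, item_id, vidinet_resource_id, callback_resource_id):
    """URL for POST /API/item/{itemId}/analyze with the service and callback IDs."""
    query = urlencode({"resourceId": vidinet_resource_id, "callbackId": callback_resource_id})
    return f"{base_url.rstrip('/')}/API/item/{quote(item_id)}/analyze?{query}"


def job_url(base_url, job_id):
    """URL to GET when checking the status of the job returned by the analyze call.

    Assumes the job is exposed at /API/job/{jobId}.
    """
    return f"{base_url.rstrip('/')}/API/job/{quote(job_id)}"
```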

The analysis service creates callback instructions in the callback resource that instruct VidiCore to import the new images and metadata returned from the DeepVA analysis. The callback instructions also instruct VidiCore to store the result in a designated collection. By default, this collection has the metadata field title set to FoundByFaceExtractor; if no collection with this title exists, VidiCore is instructed to create it.

The name of the collection can be configured by passing additional job metadata to the analysis job. See the description in the section Configuration parameters.

Searching for the collection with the title FoundByFaceExtractor:

PUT /API/collection

<ItemSearchDocument xmlns="http://xml.vidispine.com/schema/vidispine">
  <field>
    <name>title</name>
    <value>FoundByFaceExtractor</value>
  </field>
</ItemSearchDocument>

The result may look something like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<CollectionListDocument xmlns="http://xml.vidispine.com/schema/vidispine">
  <hits>1</hits>
  <collection>
    <loc>https://my-vidicore/API/collection/VX-1</loc>
    <id>VX-1</id>
    <name>FoundByFaceExtractor</name>
  </collection>
</CollectionListDocument>
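The same search can be made from a script: build the ItemSearchDocument, send it with PUT /API/collection, and read the collection ID from the CollectionListDocument. A minimal sketch of the document handling with `xml.etree.ElementTree`; sending the request is left out, and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

VS_NS = "http://xml.vidispine.com/schema/vidispine"
NS = {"vs": VS_NS}


def collection_search_document(title: str) -> str:
    """ItemSearchDocument body for PUT /API/collection, matching on the title field."""
    return (
        f'<ItemSearchDocument xmlns="{VS_NS}">'
        f"<field><name>title</name><value>{escape(title)}</value></field>"
        "</ItemSearchDocument>"
    )


def collection_ids_by_name(xml_text, name: str) -> list:
    """IDs of collections in a CollectionListDocument whose <name> equals `name`.

    `xml_text` may be str or bytes, as returned by the HTTP client.
    """
    root = ET.fromstring(xml_text)
    return [
        coll.findtext("vs:id", namespaces=NS)
        for coll in root.findall("vs:collection", NS)
        if coll.findtext("vs:name", namespaces=NS) == name
    ]
```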

Looking at the content in the collection:

GET /API/collection/VX-1/item?content=metadata

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<ItemListDocument xmlns="http://xml.vidispine.com/schema/vidispine">
  <hits>10</hits>
  <item id="VX-38">
    <metadata>
      <revision>VX-236,VX-336,VX-225,VX-275,VX-277</revision>
      <timespan start="-INF" end="+INF">
        ...
        <field uuid="8ed72f93-287b-4f8b-96e4-2f3dff16e461" user="admin" timestamp="2021-06-21T13:34:42.867Z" change="VX-225">
          <name>vcs_face_isTrainingMaterial</name>
          <value uuid="4a0ea9ee-5f5b-4e96-a523-401a1906cfe1" user="admin" timestamp="2021-06-21T13:34:42.867Z" change="VX-225">true</value>
        </field>
        <field uuid="af0e4bd0-07f5-4299-8ac9-989336c6b9e6" user="admin" timestamp="2021-06-21T13:34:42.867Z" change="VX-225">
          <name>vcs_face_value</name>
          <value uuid="0ab60010-aa37-4c4e-9652-559e59e9c5de" user="admin" timestamp="2021-06-21T13:34:42.867Z" change="VX-225">Frank Skinner</value>
        </field>
        <field uuid="eedf108d-5180-41a2-84e6-0346f9c55593" user="system" timestamp="2021-06-21T13:34:59.197Z" change="VX-277">
          <name>itemId</name>
          <value uuid="a9d04a5d-da88-44a6-ba7c-51f39277608e" user="system" timestamp="2021-06-21T13:34:59.197Z" change="VX-277">VX-38</value>
        </field>
        ...

We can see that the newly imported items have the vcs_face_value field set to the name tag extracted from the video, and that vcs_face_isTrainingMaterial indicates that the item can be used to train face recognition models.
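To collect the extracted name tags programmatically, the ItemListDocument can be parsed with the standard library. The sketch below maps each item ID to its vcs_face_value and to whether vcs_face_isTrainingMaterial is true. It assumes the fields appear as plain `<field>` elements with `<name>`/`<value>` children, as in the excerpt above, and keeps the last value if a field occurs more than once; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"vs": "http://xml.vidispine.com/schema/vidispine"}


def extracted_faces(xml_text) -> dict:
    """Map item ID -> {"name": vcs_face_value, "training": bool} from an ItemListDocument."""
    root = ET.fromstring(xml_text)
    result = {}
    for item in root.findall("vs:item", NS):
        fields = {}
        # Search all descendant fields, whichever timespan they sit in.
        for field in item.iterfind(".//vs:field", NS):
            name = field.findtext("vs:name", namespaces=NS)
            fields[name] = field.findtext("vs:value", namespaces=NS)
        result[item.get("id")] = {
            "name": fields.get("vcs_face_value"),
            "training": fields.get("vcs_face_isTrainingMaterial") == "true",
        }
    return result
```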

Configuration parameters

You can pass additional parameters to the service, such as which collection the training items should be added to or which storage the new shapes should be imported to. You pass these parameters as query parameters to the VidiCore API. For instance, to import the new training items to the collection ExampleCollection and the new shapes to storage VX-55, you could perform the following API call:

POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}&callbackId={callback-resource-id}&jobmetadata=cognitive_service_TrainingDataCollectionName=ExampleCollection&jobmetadata=cognitive_service_DestinationStorageId=VX-55

See the table below for all parameters that can be passed to the Face and Label Extractor service and their default values. Parameter names must be prefixed with cognitive_service_.
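Since every configuration parameter becomes its own jobmetadata query parameter, a helper can add the cognitive_service_ prefix and encode the query. This is a sketch with a hypothetical helper name and placeholder IDs; it keeps `=` unescaped inside the jobmetadata values to mirror the call above, while other special characters are percent-encoded.

```python
from urllib.parse import quote, urlencode

PREFIX = "cognitive_service_"


def analyze_url_with_params(base_url, item_id, vidinet_resource_id, callback_resource_id, **params):
    """Build the analyze URL with one jobmetadata entry per configuration parameter.

    Parameter names are passed without the cognitive_service_ prefix; it is added here.
    """
    query = [("resourceId", vidinet_resource_id), ("callbackId", callback_resource_id)]
    for name, value in params.items():
        query.append(("jobmetadata", f"{PREFIX}{name}={value}"))
    # safe="=" keeps the name=value separator inside each jobmetadata value literal.
    encoded = urlencode(query, safe="=")
    return f"{base_url.rstrip('/')}/API/item/{quote(item_id)}/analyze?{encoded}"
```

For example, `analyze_url_with_params("https://my-vidicore", "VX-46", "VX-10", "VX-2", TrainingDataCollectionName="ExampleCollection", DestinationStorageId="VX-55")` reproduces the call above with concrete IDs.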

| Parameter name | Default value | Description |
|---|---|---|
| TrainingDataCollectionName | Found by Face Extractor | Which collection the new training items should be added to. |
| DestinationStorageId | null | Which storage the new samples should be imported to. |
| | false | If set to true, the dataset is deleted from DeepVA after creation. This causes any extracted faces to be imported as new items. |
| | Low | Minimum accepted quality of the faces found. Supported quality settings are: |